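Various Ways to Implement a Distributed Lock

Every Java developer is familiar with locks; they come up constantly both at work and in interviews. For most small projects, that is, single-machine applications, the JUC package (java.util.concurrent) is all you need. As an application grows into a distributed system, though, synchronized and Lock are no longer enough, because they only coordinate threads inside a single JVM. This article walks through several common ways to implement a distributed lock.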

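Implementing a distributed lock with a database

Let's start with what everyone knows best, the database. MySQL is used here; other databases may differ in the details, but the general idea is the same. I'll assume you already understand ACID and transaction isolation levels, so I won't go over them.

In a single-machine application, a lock such as synchronized guards a resource so that only one thread can operate on it at a time. In a distributed system the requirement is the same: at any moment, the resource may be processed by only one thread on one machine.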

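Table-record approach

The simplest way to implement such a lock is to design a table like this:

create table lock_table
(
    id int auto_increment comment 'primary key',
    value varchar(64) null comment 'resource to lock',
    constraint lock_table_pk
        primary key (id)
);

create unique index lock_table_value_uindex
    on lock_table (value);

As you can see, the value column is unique. To acquire the lock, insert a row into this table; if the insert succeeds, the lock is considered acquired. If another transaction tries to insert the same value at that point, its insert fails. To release the lock, delete the row.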

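Clearly, this design has several problems:

- Not reentrant: the same transaction cannot acquire the lock again before releasing it.

- No expiry: if the release fails, the lock stays in place forever.

- Non-blocking: a thread that fails to get the lock does not wait; to try again it has to trigger the acquire operation again.

- Hard dependency on database availability: if the database goes down, the business becomes unavailable.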

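All of these can be worked around, though:

- Add a count column and a column recording the request ID; when the same request comes in again, increment count instead of inserting.

- Add an expiry-time column and clear stale rows with a separate scheduled job.

- Keep retrying the acquire, for example in a while loop.

- Give the database a standby so it is not a single node, to keep it highly available.

This approach is workable and simple, but when many transactions keep competing for the lock, acquisition keeps failing with exceptions, so its performance is clearly poor. In practice we normally don't use it.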
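The fixes listed above can be sketched in plain Java. This is a minimal in-memory model, not real database code: a ConcurrentHashMap stands in for lock_table and its unique index on value, and the names TableRecordLock, LockRow, requestId, count and expireAtMillis are assumptions that mirror the extra columns suggested above. In a real implementation each method would be an insert, update, or delete against MySQL, and expired rows would be removed by the scheduled job rather than on acquire.

```java
import java.util.concurrent.ConcurrentHashMap;

/** In-memory stand-in for lock_table: the map key plays the role of the unique `value` column. */
final class TableRecordLock {

    /** One row of the table, with the extra columns suggested above (all names are assumptions). */
    private static final class LockRow {
        final String requestId;     // who holds the lock
        final int count;            // reentrancy counter
        final long expireAtMillis;  // expiry time

        LockRow(String requestId, int count, long expireAtMillis) {
            this.requestId = requestId;
            this.count = count;
            this.expireAtMillis = expireAtMillis;
        }
    }

    private final ConcurrentHashMap<String, LockRow> rows = new ConcurrentHashMap<>();

    /** Non-blocking attempt; returns true if requestId holds the lock on resource afterwards. */
    boolean tryLock(String resource, String requestId, long ttlMillis, long nowMillis) {
        LockRow result = rows.compute(resource, (key, row) -> {
            if (row == null || row.expireAtMillis <= nowMillis) {
                // No row, or an expired one: this corresponds to a successful insert.
                return new LockRow(requestId, 1, nowMillis + ttlMillis);
            }
            if (row.requestId.equals(requestId)) {
                // Same request again: bump the counter instead of inserting (reentrancy).
                return new LockRow(requestId, row.count + 1, nowMillis + ttlMillis);
            }
            return row; // Held by someone else: the insert would fail on the unique index.
        });
        return result.requestId.equals(requestId);
    }

    /** Decrements the counter; deletes the row once the counter reaches zero. */
    boolean unlock(String resource, String requestId) {
        boolean[] released = {false};
        rows.computeIfPresent(resource, (key, row) -> {
            if (!row.requestId.equals(requestId)) {
                return row; // Not our lock: leave it alone.
            }
            released[0] = true;
            return row.count > 1
                    ? new LockRow(requestId, row.count - 1, row.expireAtMillis)
                    : null; // Returning null removes the mapping, i.e. the delete.
        });
        return released[0];
    }
}
```

The current time is passed in explicitly so the behavior is easy to follow and test. A blocking acquire would simply call tryLock in a loop with a short pause between attempts.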

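Optimistic locking approach

Database locks are commonly classified as optimistic or pessimistic. An optimistic lock, as the name suggests, always assumes the best case: every time it reads data it assumes nobody else will modify it, so it takes no lock, but when it updates it checks whether someone else changed the data in the meantime. It can be implemented with a version number or with a CAS (compare-and-swap) algorithm. Here, we add a version column to the table and include the version number in every update. For example: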

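create table lock_table
(
    id int auto_increment comment 'primary key',
    value varchar(64) null comment 'resource to lock',
    version int default 0 null comment 'version number',
    constraint lock_table_pk
        primary key (id)
);

Without a version number, the update statement would normally be

update lock_table set value = #{newValue} where id = #{id};

Under concurrency, however, the old value may already have been changed by the time we set the new one. MySQL's default isolation level is RR (repeatable read): a transaction sees the same data every time it reads, so changes that other transactions make to this row do not affect the value it read, say abc. The actual change may therefore no longer be abc->newValue but unknownValue->newValue. This is known as a lost update, one of the problems concurrent transactions can cause.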

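With a version number, the SQL that updates value becomes

update lock_table set value = #{newValue}, version = #{version} + 1 where id = #{id} and version = #{version};

If another transaction has modified the row in the meantime, we are still holding the old version number, so the update matches no row and fails.

Unlike the table-record approach, optimistic locking does not rely on the database's own locking mechanism to detect conflicts, so it costs little when conflicts are rare. It does require an extra column, which adds some redundancy to the schema. And under high concurrency the version changes frequently, so many requests fail, which hurts availability. Optimistic locking is therefore generally used in read-heavy, write-light scenarios where concurrency is not very high.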
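To make the version check concrete, here is a small in-memory sketch in Java. It is not JDBC code: the class VersionedRow and its methods are hypothetical names, and the synchronized update method stands in for the single `update ... where id = ... and version = ...` statement, returning true when that statement would report one affected row.

```java
import java.util.function.UnaryOperator;

/** In-memory model of one row of lock_table with a version column (hypothetical names). */
final class VersionedRow {
    private String value;
    private int version;

    VersionedRow(String initialValue) {
        this.value = initialValue;
        this.version = 0;
    }

    synchronized String value() { return value; }

    synchronized int version() { return version; }

    /** Mirrors the versioned update: succeeds only if nobody changed the row since we read it. */
    synchronized boolean update(String newValue, int expectedVersion) {
        if (version != expectedVersion) {
            return false; // Stale version: the SQL update would affect zero rows.
        }
        value = newValue;
        version = expectedVersion + 1;
        return true;
    }

    /** Read, compute, write; on a version conflict read again and retry, up to maxAttempts. */
    boolean updateWithRetry(UnaryOperator<String> change, int maxAttempts) {
        for (int attempt = 0; attempt < maxAttempts; attempt++) {
            String current;
            int seenVersion;
            synchronized (this) { // Consistent snapshot of value and version.
                current = value;
                seenVersion = version;
            }
            if (update(change.apply(current), seenVersion)) {
                return true;
            }
        }
        return false;
    }
}
```

A caller that read version 0 and then lost the race simply gets false back and decides whether to retry, which is why heavy write contention turns into many failed requests.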

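Pessimistic locking

A pessimistic lock is just as easy to understand: it always assumes the worst case. Every time it reads data it assumes someone else will modify it, so it locks on every read, and anyone else who wants the data blocks until they get the lock. To use pessimistic locking you need to turn off MySQL's default autocommit mode, that is, set autocommit=0.

There are two kinds of pessimistic lock. One is the shared lock: multiple transactions can hold the same lock on the same resource. Appending lock in share mode to a statement places a shared lock on the rows it reads. A transaction requests the shared lock before reading; once it holds the lock, other transactions can still add shared locks but cannot add an exclusive lock. The other is the exclusive lock: across transactions, only one lock can exist on the resource at a time. As with the shared lock, you just append for update to the statement. For the scenario above, the query becomes

select id, value from lock_table where id = #{id} for update;

Now any other transaction that tries to modify this row blocks until the current transaction commits, which keeps the data safe. The downside of pessimistic locking is that every request pays the cost of locking, and requests that don't get the lock block while waiting for it. Under high concurrency this easily leaves many requests blocked and hurts availability.

Also, if the query does not specify the primary key (or an index), or the key condition is not specific (for example id>0), and it matches rows, the whole table ends up locked. And even when we do specify the primary key to get a row lock, MySQL sometimes decides that using the index is slower than a full table scan and skips the index; this usually happens when the table is small.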

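Implementing a distributed lock with Redis

Next, Redis. This is far more common than the database approaches above; a database is fragile after all, and pushing high-concurrency load onto it can easily bring it down. The most common way to build a distributed lock on Redis is the setnx command, which means set if not exists. As shown below, if the key does not exist it is set (returns 1); otherwise the command fails (returns 0).

127.0.0.1:6379> setnx name aa
(integer) 1
127.0.0.1:6379> get name
"aa"
127.0.0.1:6379> setnx name bb
(integer) 0

A lock generally needs an expiry, which can be set with expire. But running setnx and then expire is two separate operations and is not atomic: if something goes wrong so that expire is never executed or fails, the lock is never released. There are two ways to solve this. One is a Lua script containing both setnx and expire; Redis executes a Lua script atomically, so the two operations behave as a single step. The other is to use a different command: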

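set key value [EX seconds] [PX milliseconds] [NX|XX]

- EX seconds: set the expiry, in seconds

- PX milliseconds: set the expiry, in milliseconds

- NX: set the value only if the key does not exist

- XX: set the value only if the key already exists

The second method is the more common and the recommended one, but it is still not absolutely safe. For example, say thread A acquires the lock, whose key is lock_key, with an expiry of 10s. A goes off to work with the lock. At 10s the lock expires, but A is slow and hasn't finished; because the expiry has passed, thread B can now acquire the lock. At 11s A finishes and releases the lock, but the lock now belongs to B, so A releases B's lock. Between 10s and 11s two threads held the lock and ran their business code at the same time, and on top of that the lock was released by the wrong owner. In pseudocode: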

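// acquire the lock
redisService.set("lock_key", requestId, 10);
// business code
dosomething();
// release the lock
redisService.release("lock_key");

The crux of the wrong-release problem is that the lock is deleted directly by key, without checking whether the key still belongs to the caller. If we check at delete time that the value is still our own, the wrong release is prevented. A Lua script can handle this logic too: we first check that the key's value is the one we originally set and then delete it, which is two steps that must be atomic. As for how to write the script, you can look it up yourself; to be honest I haven't studied Lua yet, and I don't want to copy it from someone else's article.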
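For reference, the compare-and-delete idea can be sketched as follows. The RELEASE_SCRIPT constant holds the script shown in the Redis documentation for the SET command; everything else is an assumption made for illustration: InMemoryRedisLock is a hypothetical in-memory model (no Redis client involved) whose tryLock mimics SET key value NX PX and whose release mimics what the script does on the server. Time is passed in explicitly so the 10s/11s scenario above can be replayed.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical in-memory model of a Redis lock with owner-checked release. */
final class InMemoryRedisLock {

    /** Compare-and-delete script from the Redis SET documentation; run with EVAL on a real server. */
    static final String RELEASE_SCRIPT =
            "if redis.call('get', KEYS[1]) == ARGV[1] then "
          + "return redis.call('del', KEYS[1]) else return 0 end";

    private static final class Entry {
        final String value;
        final long expireAtMillis;

        Entry(String value, long expireAtMillis) {
            this.value = value;
            this.expireAtMillis = expireAtMillis;
        }
    }

    private final Map<String, Entry> store = new HashMap<>();

    /** Models SET key requestId NX PX ttlMillis: succeeds only if the key is absent or expired. */
    synchronized boolean tryLock(String key, String requestId, long ttlMillis, long nowMillis) {
        Entry entry = store.get(key);
        if (entry != null && entry.expireAtMillis > nowMillis) {
            return false;
        }
        store.put(key, new Entry(requestId, nowMillis + ttlMillis));
        return true;
    }

    /** Models RELEASE_SCRIPT: delete the key only if it still holds our requestId. */
    synchronized boolean release(String key, String requestId, long nowMillis) {
        Entry entry = store.get(key);
        if (entry == null || entry.expireAtMillis <= nowMillis) {
            store.remove(key); // Already expired: nothing of ours left to release.
            return false;
        }
        if (!entry.value.equals(requestId)) {
            return false; // The lock now belongs to someone else: do not touch it.
        }
        store.remove(key);
        return true;
    }

    /** Current owner of the key, or null if it is free. */
    synchronized String owner(String key, long nowMillis) {
        Entry entry = store.get(key);
        return (entry == null || entry.expireAtMillis <= nowMillis) ? null : entry.value;
    }
}
```

With this check in place, A's late release at 11s returns false and B keeps its lock. It does not fix the overlap between 10s and 11s, which is a separate problem.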

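The other problem is that between 10s and 11s two threads hold the lock at once, which defeats the original requirement: a lock exists precisely so that only one thread holds it at a time. If the business logic hasn't finished within the lock's validity period, the lock's lifetime should be extended until the work is done, and only then should the lock be released. This is where Redisson, a commonly used Redis client, comes in. It has a mechanism called the watchdog that keeps postponing the lock's expiry. The mechanism is governed by the lockWatchdogTimeout property, which defaults to 30000 ms. The setting only applies when the lock is acquired without a leaseTime, so avoid the overloads that take a leaseTime argument and use one that doesn't, such as tryLock(). As shown below, its underlying implementation passes -1 as leaseTime.

public RFuture<Boolean> tryLockAsync(long threadId) {
    // the second argument is leaseTime
    return this.tryAcquireOnceAsync(-1L, -1L, (TimeUnit)null, threadId);
}

Below is the Redisson documentation's explanation of this parameter. Note that the lock is always released eventually, so the watchdog cannot absolutely guarantee that two threads never hold the lock at once. The default is 30s; if the watchdog does not extend the lock to the next 30s interval, for example because the client has crashed, the lock expires on its own. In production, though, if a piece of business logic holds a lock for a minute without finishing, you should consider whether to lengthen the expiry or whether the code or the environment is unstable.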

lockWatchdogTimeout

Default value: 30000

Lock watchdog timeout in milliseconds. This parameter is only used if lock has been acquired without leaseTimeout parameter definition. Lock will be expired after lockWatchdogTimeout if watchdog didn’t extend it to the next lockWatchdogTimeout time interval. This prevents against infinity locked locks due to Redisson client crush or any other reason when lock can’t be released in proper way.

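Besides the wrong-release problem, there is another one. In practice Redis rarely runs on a single machine; it is deployed as a cluster. In sentinel mode, for example, suppose a client acquires a lock on the master and the master suddenly goes down. Failover kicks in and a slave is promoted to the new master; if the lock key had not been replicated to it yet, another client can acquire the same lock on the new master, so two clients hold the same lock at once. For this reason the Redis website has an article on implementing distributed locks with Redis, whose core algorithm is called RedLock. It assumes 5 independent Redis master nodes running on different machines or virtual machines. A client then acquires the lock as follows:

1. Get the current timestamp in milliseconds.

2. Try to acquire the lock on the 5 instances in sequence, using the same key and a random value. When requesting the lock on each instance, the client sets a timeout that is small compared with the lock's auto-release time. For example, if the auto-release time is 10s, the timeout could be 5~50 milliseconds. This keeps the client from staying blocked for long on an instance that is down; if an instance is unavailable, we should move on to the next one as soon as possible.

3. The client computes how long acquisition took, that is, the difference between the current time and the timestamp from step 1. The lock counts as acquired if and only if the client acquired it on a majority of the instances (N/2+1; with N=5 that is 3) and the total acquisition time is less than the lock's validity time.

4. If the lock was acquired, its actual validity time is the initial validity time minus the elapsed time computed in step 3.

5. If the client failed to acquire the lock for some reason (for example it did not get the lock on N/2+1 instances, or the final validity time is negative), it tries to release the lock on all instances (even those where it never held the lock).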
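The arithmetic in the steps above is small enough to write down. The following is a minimal sketch of only the decision in steps 3 to 5, assuming the per-instance lock attempts have already been made; RedLockMath and its method names are hypothetical and are not part of Redis or Redisson.

```java
/** Hypothetical helper for the majority and validity checks described above. */
final class RedLockMath {

    /** Majority of n instances: N/2 + 1 with integer division, so 3 when n is 5. */
    static int quorum(int instances) {
        return instances / 2 + 1;
    }

    /**
     * Returns the remaining validity time in milliseconds if the lock counts as acquired,
     * or -1 if the attempt failed and the lock must be released on all instances.
     *
     * @param instances   total number of independent Redis masters (N)
     * @param acquired    number of instances on which the lock was obtained
     * @param ttlMillis   initial validity time of the lock
     * @param startMillis timestamp taken before the first attempt
     * @param endMillis   timestamp taken after the last attempt
     */
    static long remainingValidityMillis(int instances, int acquired,
                                        long ttlMillis, long startMillis, long endMillis) {
        long elapsed = endMillis - startMillis;
        long validity = ttlMillis - elapsed;
        if (acquired >= quorum(instances) && validity > 0) {
            return validity;
        }
        return -1;
    }
}
```

For example, with 5 instances, a 10s lock, and 120 ms spent acquiring it on 3 of them, the lock is held with 9880 ms of validity left; with only 2 instances acquired the result is -1 and the client should release everywhere.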

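Redisson also ships an implementation of the algorithm, RedissonRedLock, but it has a large performance cost. Unless it is really necessary it is generally not used; such extreme cases are more likely to be handled by changing the business design or adopting a different technical solution.

Implementing a distributed lock with ZooKeeper

The last approach to cover is ZooKeeper. Everyone is surely familiar with it, though for many developers it is just the registry used alongside Dubbo. It can do far more than that. It is in fact a typical distributed data consistency solution, on which distributed applications can build data publish/subscribe, load balancing, naming services, distributed coordination/notification, cluster management, master election, distributed locks, and distributed queues. I'll first cover a few basic concepts that come up later when implementing the lock. ZooKeeper has many other concepts and details, but they are not the focus of this article.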

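Basic concepts

Data node (znode)

The first is the data node, or znode. ZooKeeper actually has two kinds of nodes. One is the machines that make up the cluster, which we call machine nodes; the other is the data node, the znode, which is the unit of data in the data model. ZooKeeper keeps all data in memory. The data model is a tree, and each slash-separated path is a znode. Every znode stores its own data content along with a set of attributes.

Znodes also come in two types. A persistent node, once created, stays on ZooKeeper until it is explicitly removed. An ephemeral node, as the name suggests, has a lifecycle bound to the client session: once the session ends, all ephemeral nodes created by that client are removed.

In addition, ZooKeeper lets you give each node a special attribute: SEQUENTIAL. When a node marked with this attribute is created, ZooKeeper automatically appends an integer to its name, an auto-incrementing number maintained by the parent node. So both persistent and ephemeral nodes can be sequential, giving persistent sequential nodes and ephemeral sequential nodes.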

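Version

For every znode, ZooKeeper maintains a data structure called Stat, which records three data versions of the znode: version (the version of the znode itself), cversion (the version of its children), and aversion (the version of its ACL, the Access Control Lists that make up ZooKeeper's permission control).

Event listener (Watcher)

Another concept is the Watcher, an event listener. ZooKeeper lets users register Watchers on specified nodes; when certain events fire, the ZooKeeper server notifies the interested clients. This mechanism is an important feature of how ZooKeeper implements distributed coordination.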

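How the lock is implemented

With the basics covered, let's use ZooKeeper to implement a distributed lock. ZooKeeper represents a lock with a data node. Below are several ways to implement one, again organized into optimistic and pessimistic locks.

Optimistic lock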

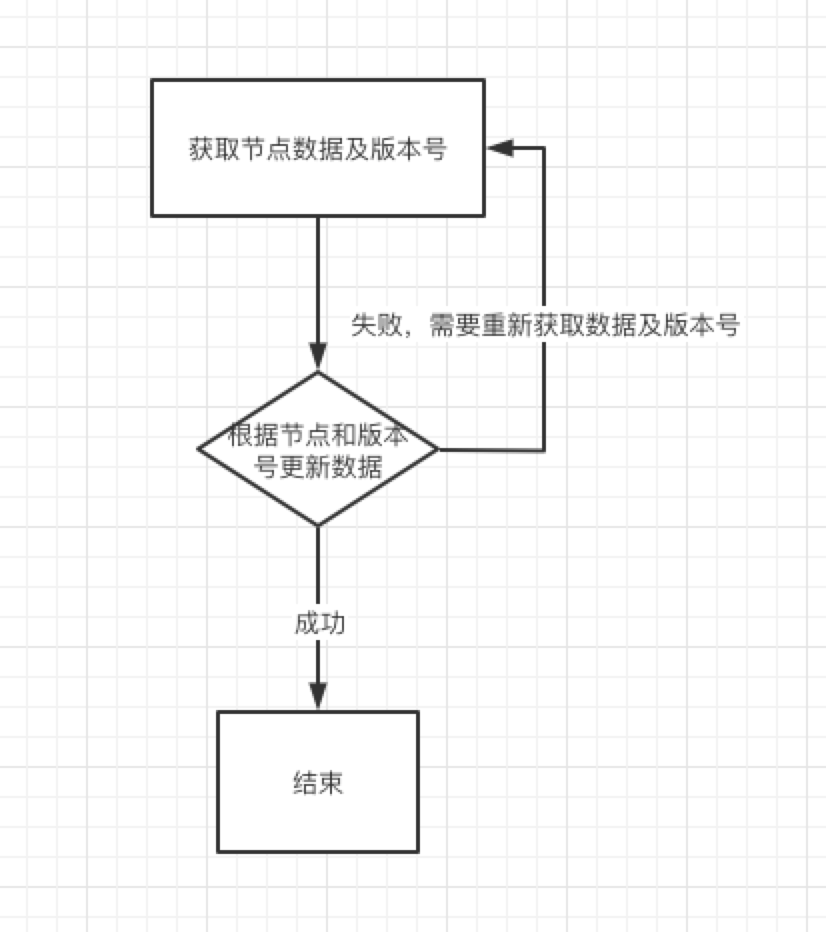

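The main idea behind optimistic locking is the CAS algorithm, so with ZooKeeper it likewise relies on the version number.

Every update carries the version number; if it does not match the current version, the update fails and has to be retried. This logic is fairly simple, so I won't go into more detail.

Pessimistic lock

Exclusive lock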

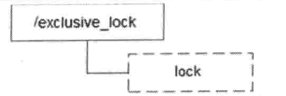

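The exclusive lock was already explained in the database section. It is also called a write lock or a sole-owner lock and is a basic lock type. If transaction T1 places an exclusive lock on data object O1, then for as long as the lock is held only T1 may read and update O1; no other transaction may perform any kind of operation on the object until T1 releases the lock. The figure shows an exclusive lock: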

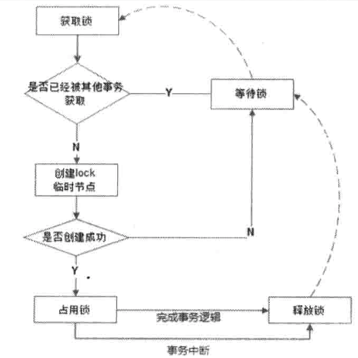

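To acquire the exclusive lock, every client tries to call the create() API to create the ephemeral child node /exclusive_lock/lock under /exclusive_lock. ZooKeeper guarantees that only one of the clients succeeds, and that client is considered to hold the lock. Every client that did not get the lock registers a Watcher for child changes on /exclusive_lock, so that it learns about changes to the lock node in real time.

The lock is released in two cases. In one, the client machine holding the lock crashes, and ZooKeeper removes the ephemeral node. In the other, the business logic completes normally and the client deletes the ephemeral node it created. Either way, once the lock node is removed, ZooKeeper notifies every client that registered a child-change Watcher on /exclusive_lock. On receiving the notification, those clients try to acquire the lock again, that is, they repeat the acquire process.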
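The acquire, watch, and release cycle just described can be modelled in a few lines. This is a toy in-memory model under stated assumptions, not ZooKeeper client code: ExclusiveLockModel and its methods are hypothetical names, a session ID string stands in for a client session, and watchers are fired once and then dropped, which mirrors ZooKeeper's one-time Watcher behavior.

```java
import java.util.ArrayList;
import java.util.List;

/** Toy model of the ephemeral node /exclusive_lock/lock and its child-change watchers. */
final class ExclusiveLockModel {
    private String holderSession;                       // null means the lock node does not exist
    private final List<Runnable> watchers = new ArrayList<>();

    /** Models create(): succeeds for exactly one session while the node is absent. */
    synchronized boolean tryCreate(String sessionId) {
        if (holderSession != null) {
            return false; // Node already exists: someone else holds the lock.
        }
        holderSession = sessionId;
        return true;
    }

    /** Models registering a child-change Watcher on /exclusive_lock. */
    synchronized void watch(Runnable onChange) {
        watchers.add(onChange);
    }

    /** Normal release: the holder deletes its own node. */
    void delete(String sessionId) {
        removeNode(sessionId);
    }

    /** Crash or session expiry: the ephemeral node disappears with the session. */
    void sessionClosed(String sessionId) {
        removeNode(sessionId);
    }

    synchronized String holder() {
        return holderSession;
    }

    private void removeNode(String sessionId) {
        List<Runnable> toNotify;
        synchronized (this) {
            if (!sessionId.equals(holderSession)) {
                return; // This session does not own the node.
            }
            holderSession = null;
            toNotify = new ArrayList<>(watchers);
            watchers.clear(); // Watchers fire once and must be registered again.
        }
        for (Runnable watcher : toNotify) {
            watcher.run(); // Waiting clients typically call tryCreate() again here.
        }
    }
}
```

A waiting client would register `() -> model.tryCreate("session-B")` as its watcher; when session A deletes the node or its session closes, B is notified and repeats the acquire step.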

Overall, the whole exclusive-lock flow can be represented by the figure below:

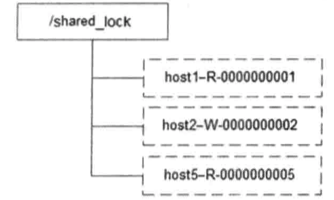

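Shared lock

Next, the shared lock, also called a read lock. If transaction T1 places a shared lock on data object O1, T1 can only read O1, and other transactions can only place shared locks on the object as well, until all shared locks on it have been released. As with the exclusive lock, a data node on ZooKeeper represents the lock: an ephemeral sequential node named like "/shared_lock/[Hostname]-request type-sequence number".

To acquire the shared lock, every client creates an ephemeral sequential node under /shared_lock. A read request creates a node such as /shared_lock/192.168.0.1-R-000000001; a write request creates one such as /shared_lock/192.168.0.1-W-000000001. With a shared lock, multiple transactions can read the same data at the same time, but they cannot write at the same time.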

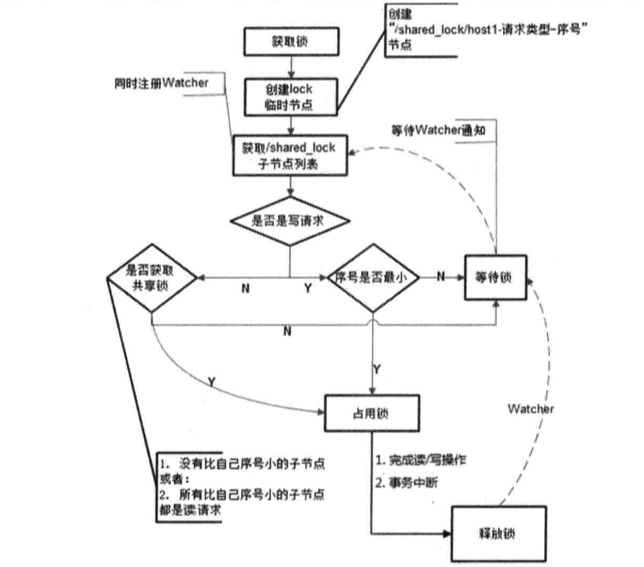

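The implementation logic, as described in the book 《从Paxos到ZooKeeper分布式一致性原理与实践》 (From Paxos to ZooKeeper: Distributed Consistency Principles and Practice), which most of this ZooKeeper section comes from, is as follows:

1. After creating the node, get all children of /shared_lock and register a child-change Watcher on that node.

2. Determine where your own node's sequence number falls among all the children.

3. For a read request:

   - If there is no child with a smaller sequence number, or all children with smaller sequence numbers are read requests, the shared lock has been acquired; start the read logic.

   - If any child with a smaller sequence number is a write request, wait.

4. For a write request:

   - If your own node is not the child with the smallest sequence number, wait.

5. On receiving a Watcher notification, repeat step 1.

6. Releasing the lock works the same way as for the exclusive lock, so it is not repeated here.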
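The read and write rules above boil down to a check over the list of child node names. Here is a minimal sketch of that check only, assuming names of the form host-R-sequence or host-W-sequence as in the examples; SharedLockCheck and its methods are hypothetical, and fetching the children from ZooKeeper is left out.

```java
import java.util.List;

/** Hypothetical helper: decides from the child list whether a node holds the shared lock. */
final class SharedLockCheck {

    /** Sequence number: the digits after the last '-' in the node name. */
    static long sequenceOf(String node) {
        return Long.parseLong(node.substring(node.lastIndexOf('-') + 1));
    }

    /** True for a write request, i.e. a name like host-W-sequence. */
    static boolean isWrite(String node) {
        return node.charAt(node.lastIndexOf('-') - 1) == 'W';
    }

    /**
     * A read request acquires the lock if no smaller node is a write request.
     * A write request acquires the lock only if it has the smallest sequence number.
     */
    static boolean canAcquire(String self, List<String> children) {
        long mySequence = sequenceOf(self);
        boolean selfIsWrite = isWrite(self);
        for (String child : children) {
            if (sequenceOf(child) >= mySequence) {
                continue; // Only nodes created before ours matter.
            }
            if (selfIsWrite || isWrite(child)) {
                return false; // A writer waits for everyone; a reader waits for earlier writers.
            }
        }
        return true;
    }
}
```

Using the last '-' to split the name keeps the check working even if the host part itself contains dashes.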

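Below is the flowchart for the shared lock. When I tried to write this code, however, I ran into a problem: if the child-list Watcher is registered right at the start, then even the thread that won the lock triggers its own Watcher when it releases the lock, so its business logic runs a second time. I'm not sure whether this is a problem with my implementation or with the logic itself. When I moved the Watcher registration to the point where acquiring the lock fails, the program ran without problems. Interested readers are welcome to look into it.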

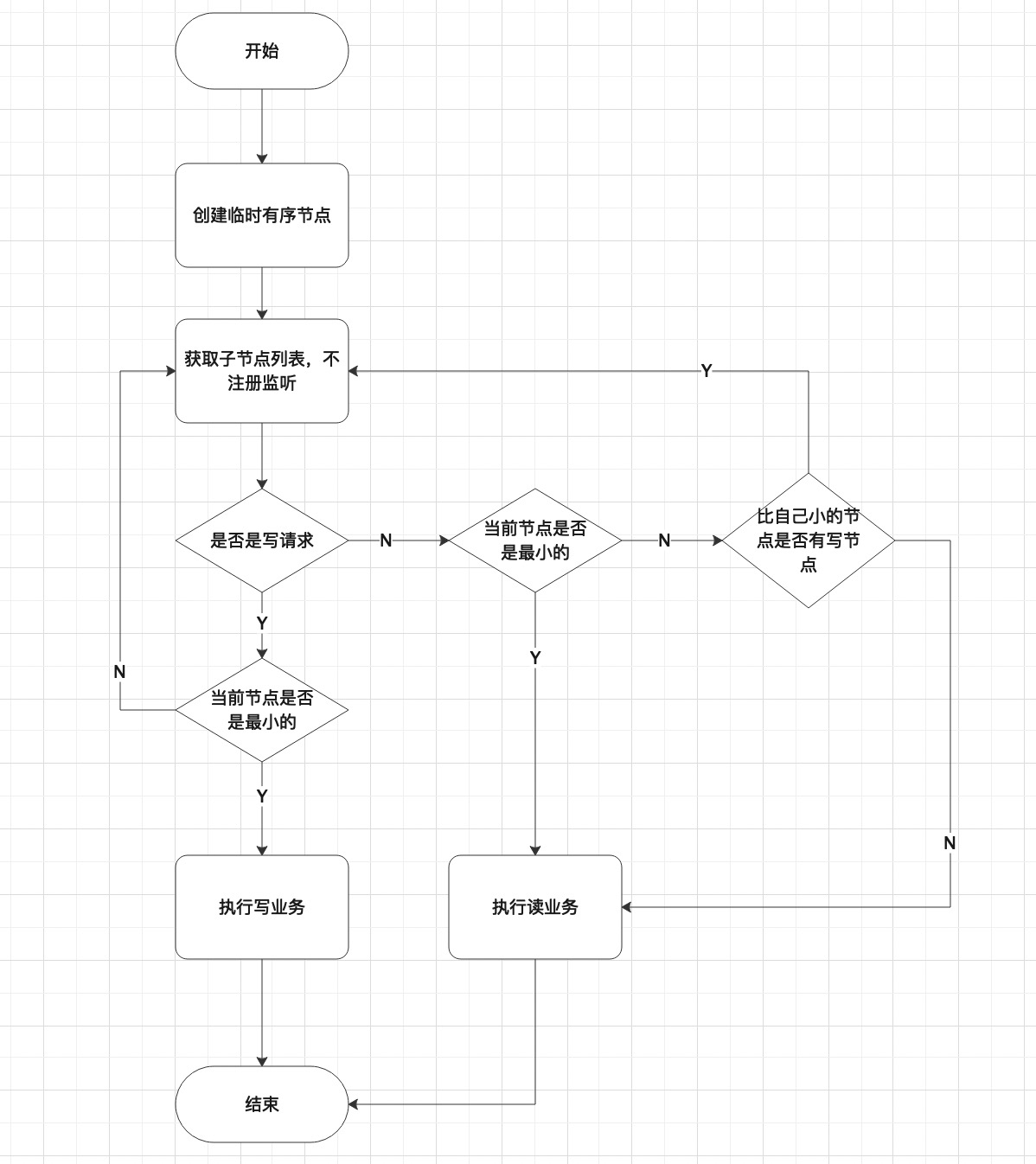

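So I wrote new logic based on my own understanding; the flowchart is below. This logic does not actually produce the herd effect discussed next, but it does lead to many failed attempts to grab the lock followed by repeated retries.

Herd effect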

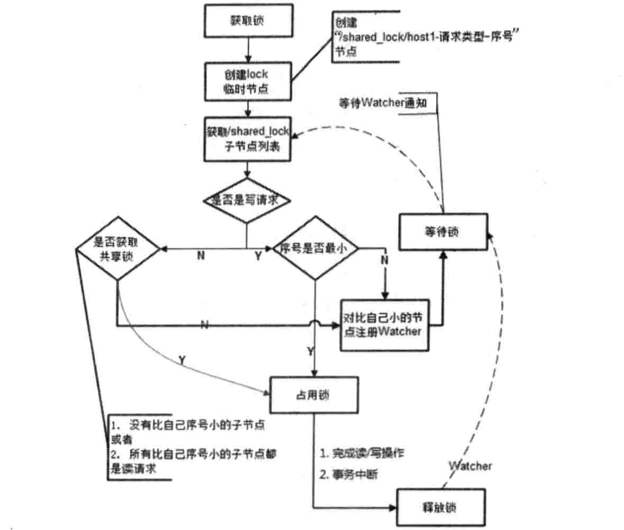

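From the logic above you can see that, throughout the competition for the lock, the two operations "Watcher notification" and "fetch child list" run over and over, and in the vast majority of cases the outcome is that the client finds it is not the node with the smallest sequence number and goes back to waiting for the next notification. If the clients behind several nodes finish or abort their transactions at the same time and their nodes disappear, the ZooKeeper server sends a large number of event notifications to the remaining clients in a short time. This is the so-called herd effect. What a client really cares about, though, is whether its own node has the smallest sequence number among all the children, so each client only needs to watch the relevant node with a smaller sequence number than its own. Based on this idea the algorithm can be improved. Again, here are the steps from the book first:

1. The client calls create() to create an ephemeral sequential node like "/shared_lock/[Hostname]-request type-sequence number".

2. The client calls getChildren() to get the list of all children created so far. Note that no Watcher is registered here.

3. If the shared lock cannot be acquired, call exists() to register a Watcher on the node smaller than its own. Note that "the node smaller than its own" is a loose description; it differs for read and write requests:

   - Read request: register the Watcher on the last write-request node with a smaller sequence number.

   - Write request: register the Watcher on the last node with a smaller sequence number.

4. Wait for the Watcher notification, then go back to step 2.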
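Step 3 is the part that removes the herd effect, so it is worth spelling out which node each client ends up watching. The sketch below covers only that selection, under the same naming assumption as the examples (host-R-sequence or host-W-sequence); WatchTargetChooser is a hypothetical helper, and the actual exists() call with a Watcher is not shown.

```java
import java.util.List;
import java.util.Optional;

/** Hypothetical helper: picks the single node a waiting client should watch. */
final class WatchTargetChooser {

    private static long sequenceOf(String node) {
        return Long.parseLong(node.substring(node.lastIndexOf('-') + 1));
    }

    private static boolean isWrite(String node) {
        return node.charAt(node.lastIndexOf('-') - 1) == 'W';
    }

    /**
     * Read request: the last write node with a smaller sequence number.
     * Write request: the last node of any type with a smaller sequence number.
     * An empty result means nothing blocks this node, so it holds the lock.
     */
    static Optional<String> nodeToWatch(String self, List<String> children) {
        long mySequence = sequenceOf(self);
        boolean selfIsWrite = isWrite(self);
        String target = null;
        for (String child : children) {
            long sequence = sequenceOf(child);
            if (sequence >= mySequence) {
                continue; // Only earlier nodes can block us.
            }
            if (!selfIsWrite && !isWrite(child)) {
                continue; // A reader is never blocked by an earlier reader.
            }
            if (target == null || sequence > sequenceOf(target)) {
                target = child; // Keep the closest blocking node.
            }
        }
        return Optional.ofNullable(target);
    }
}
```

Each release now wakes at most the clients watching that one node instead of every waiter. If the chosen node has already disappeared by the time the Watcher is registered, the client has nothing to wait for and should go back to step 2.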

The flowchart is as follows:

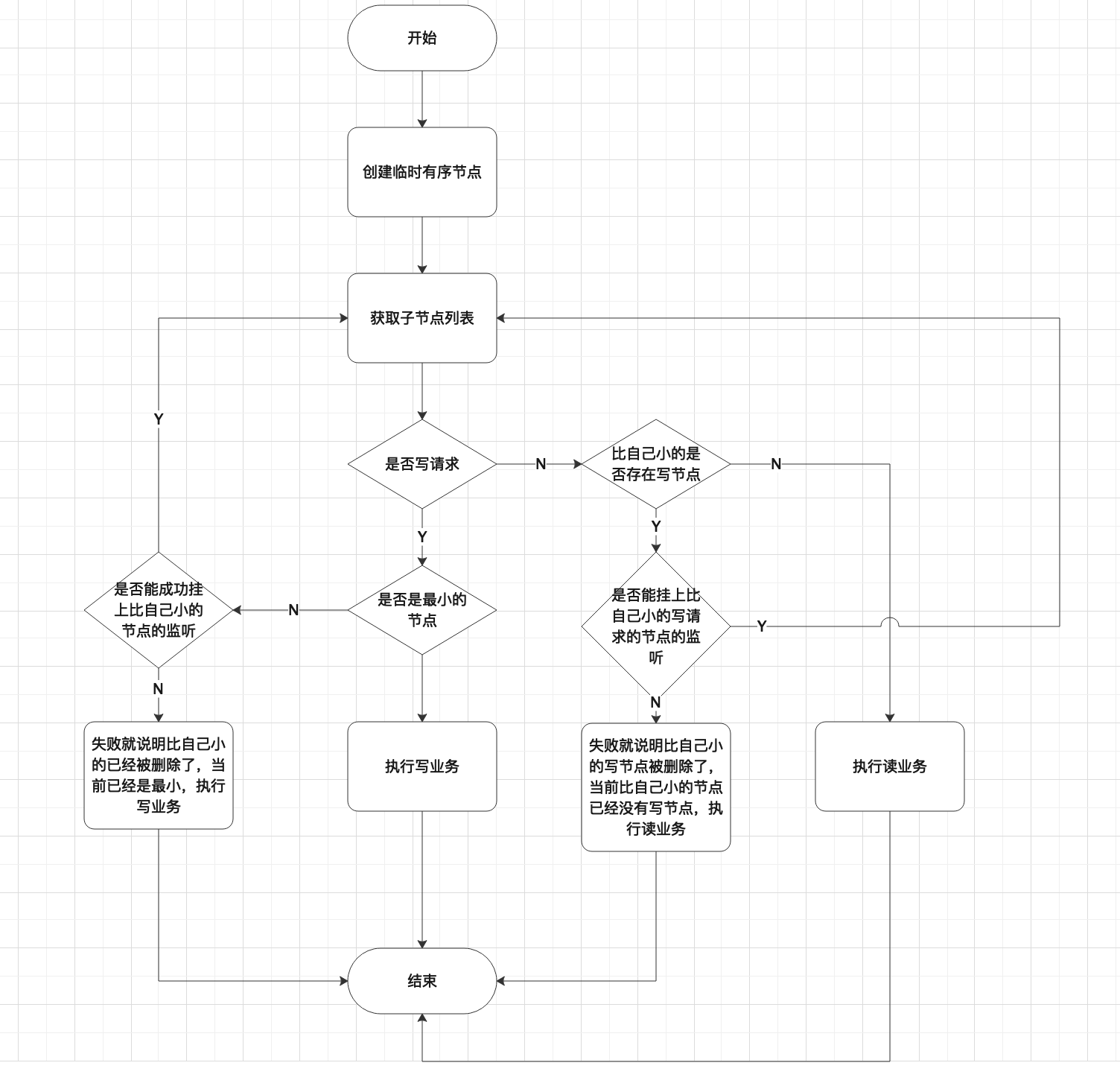

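My doubt about this flow is the following. Suppose two threads A and B arrive at the same time, both with write requests. A has the smallest sequence number and takes the lock; B needs to register a Watcher on the node smaller than its own. But if A finishes its business operation before B's Watcher is registered, B may fail to attach the Watcher. In that case I think B should try to acquire the lock again. So below is the flowchart I designed.

To sum up, my understanding of the ZooKeeper shared-lock implementation differs somewhat from the book's, but I think the ideas are close. In day-to-day work, if we really need this, we would most likely use the Curator framework. When I have time I'll look into how to use it and some of its internals, and I might (which means I won't) write another article about it.

Implementing a distributed lock with ZooKeeper is somewhat more complex than the other two approaches, but it is more reliable than Redis. Redis's RedLock algorithm does not guarantee 100% reliability either, whereas ZooKeeper implements the lock by creating and deleting nodes, and the node is removed automatically when its client goes down, so it avoids the crash-related problems that Redis has. Most of the time, though, we don't need guarantees that strict, so the Redis distributed lock is the one I see most often at work.

Finally, my ZooKeeper distributed-lock code is too long to include here, so I put it on GitHub, with both the implementation and the test code. If you spot any problems, please point them out in the comments (sincerely: the shared-lock part above has been bugging me for half a month, and I would love for an expert to tell me whether the problem is mine or the book just isn't rigorous enough).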