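Case Studies in Deep Convolutional Neural Networks

Deep learning is moving fast, and new neural network architectures appear constantly. Studying these cases is a practical way to keep up. This post walks through several convolutional neural network case studies, based on Week 2 of the fourth course in Andrew Ng's Deep Learning Specialization.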

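Why Study Case Studies

Start with a question: why study these cases at all?

- First, they carry the knowledge and experience that earlier researchers built up in network design. Working through them gives a direct feel for design ideas that worked and a deeper understanding of how to structure a network.

- Second, good architectures tend to transfer. The structure AlexNet used for ImageNet classification can also be applied to other vision tasks, so learning these architectures helps us design general-purpose models.

- Third, reading papers is a way to improve. Following the state of the art broadens your view and trains your ability to analyze and learn, and that ability carries over to other fields even if you never work on vision.

- Finally, these cases come from top researchers, and the ideas in them are advanced and original. Studying them helps us build models and also sparks new ideas of our own.

A solid understanding of the classic deep learning networks is therefore well worth the effort. The sections below look at some of the most important ones.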

Classic Networks for Image Classification

Image classification has produced several landmark models, including LeNet-5, AlexNet, and VGGNet.

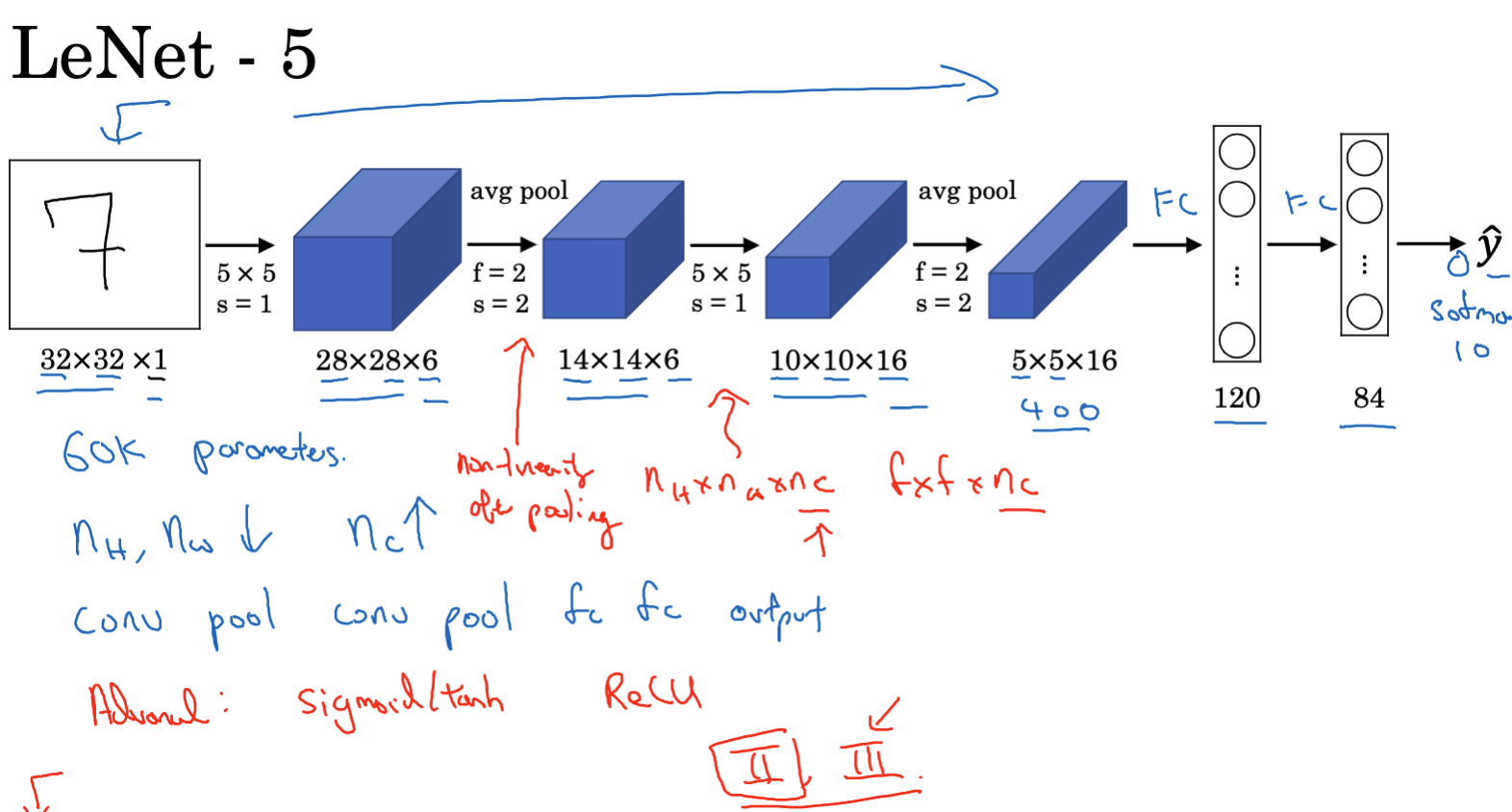

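LeNet-5 was proposed by Yann LeCun in 1998 as an early convolutional neural network (CNN) for digit recognition, handwritten digits in particular. It reached very high recognition accuracy for its time and strongly influenced later CNN architectures. Its key components are:

- Input layer: a 32x32x1 grayscale image. The 1 is the number of channels, which is 1 because the image is grayscale.

- Layer 1 (convolution): 6 filters of size 5x5, stride 1, no zero padding. The output is a 28x28x6 feature map.

- Layer 2 (pooling/subsampling): average pooling with a 2x2 filter and stride 2. This shrinks the volume to 14x14x6.

- Layer 3 (convolution): 16 filters of size 5x5, stride 1, no zero padding. The output is 10x10x16.

- Layer 4 (pooling/subsampling): average pooling with a 2x2 filter and stride 2. The output is 5x5x16.

- Fully connected layers: after the convolution and pooling layers, the 5x5x16 output is flattened into 400 units, which feed a fully connected layer of 120 units followed by one of 84 units.

- Output layer: a final layer of 10 units, one for each handwritten digit 0-9.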

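LeNet-5 has a few characteristic features:

- Average pooling: average pooling was more common at the time than the max pooling that is usual today.

- Activation functions: unlike modern networks, LeNet-5 used sigmoid or tanh activations rather than the now-standard ReLU.

- Parameter count: the network is small, with roughly 60,000 parameters.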
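As a rough check on the numbers above, the sketch below traces the layer shapes and counts the parameters. It assumes the simplified LeNet-5 described here, with every filter connected to all input channels (the original 1998 network used sparser connections in places, so its exact count differs). The names `conv_out` and `layers` are illustrative only.

```python
def conv_out(n, f, stride=1, pad=0):
    """Output width/height of a convolution or pooling layer."""
    return (n + 2 * pad - f) // stride + 1

# (kind, filter size, stride, number of filters) for the simplified LeNet-5
layers = [("conv", 5, 1, 6), ("pool", 2, 2, None),
          ("conv", 5, 1, 16), ("pool", 2, 2, None)]

size, channels, params = 32, 1, 0
shapes = []
for kind, f, stride, n_filters in layers:
    size = conv_out(size, f, stride)
    if kind == "conv":
        params += (f * f * channels + 1) * n_filters  # weights + one bias per filter
        channels = n_filters
    shapes.append((size, size, channels))

# Fully connected layers: 400 -> 120 -> 84 -> 10
fc_sizes = [size * size * channels, 120, 84, 10]
for n_in, n_out in zip(fc_sizes, fc_sizes[1:]):
    params += (n_in + 1) * n_out  # weights + biases

print(shapes)  # [(28, 28, 6), (14, 14, 6), (10, 10, 16), (5, 5, 16)]
print(params)  # 61706
```

Almost all of the parameters sit in the first fully connected layer (400 x 120), which is typical of these early designs.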

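LeNet-5 is an important milestone in the history of convolutional neural networks, as one of the first CNNs applied successfully to digit recognition. Modern networks are more complex and more efficient, but the basic design of LeNet-5 still shapes many of today's architectures.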

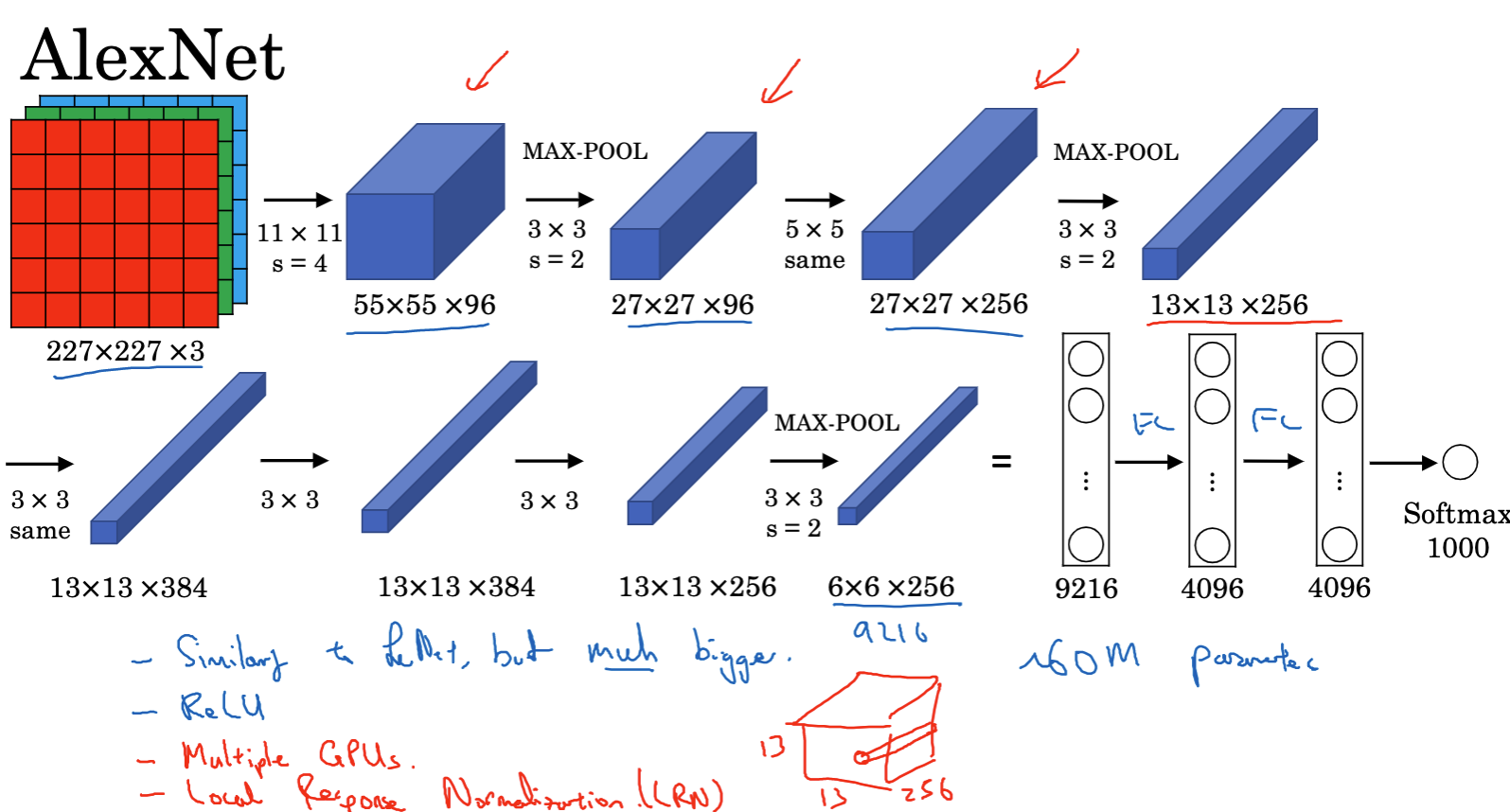

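AlexNet was proposed by Alex Krizhevsky et al. in 2012 and achieved a historic breakthrough on the 1000-class ImageNet classification task. Its structure is roughly:

- The input image is 227x227x3. (The paper states 224x224x3, but 227x227 is the size that makes the layer arithmetic work out.)

- The first layer applies 96 filters of size 11x11 with stride 4, giving a 55x55x96 output.

- A 3x3 max pooling layer with stride 2 then reduces the volume to 27x27x96.

- A 5x5 same convolution follows, giving a 27x27x256 output.

- Max pooling then reduces the height and width to 13.

- Several consecutive 3x3 same convolutions keep the spatial size at 13x13, and a final max pooling layer brings the volume down to 6x6x256.

- Flattening this gives 9216 units, which pass through several fully connected layers before a softmax outputs one of the 1000 classes.

- AlexNet is structurally similar to LeNet but far larger: the parameter count grows from about 60,000 to about 60 million.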
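The spatial sizes in this list follow from the usual output-size formula, floor((n + 2p - f) / s) + 1. The sketch below traces them, assuming the last stage is three 3x3 same convolutions followed by one more 3x3, stride-2 max pooling layer, as in the AlexNet paper. The `alexnet` table and the helper names are illustrative; the sketch also shows why 227 works where 224 does not.

```python
def conv_out(n, f, stride=1, pad=0):
    """Return the output size, or None if the filter does not tile the input evenly."""
    span = n + 2 * pad - f
    if span % stride != 0:
        return None
    return span // stride + 1

# (name, filter size, stride, padding)
alexnet = [
    ("conv 11x11", 11, 4, 0),
    ("max pool", 3, 2, 0),
    ("conv 5x5 same", 5, 1, 2),
    ("max pool", 3, 2, 0),
    ("conv 3x3 same", 3, 1, 1),
    ("conv 3x3 same", 3, 1, 1),
    ("conv 3x3 same", 3, 1, 1),
    ("max pool", 3, 2, 0),
]

def trace(n):
    """Spatial size after each layer; stops at the first layer that does not fit."""
    sizes = []
    for _name, f, stride, pad in alexnet:
        n = conv_out(n, f, stride, pad)
        sizes.append(n)
        if n is None:
            break
    return sizes

print(trace(227))  # [55, 27, 27, 13, 13, 13, 13, 6]
print(trace(224))  # [None], because 224 - 11 is not divisible by 4
print(6 * 6 * 256)  # 9216 units after flattening
```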

AlexNet demonstrated the power of large, deep convolutional neural networks and is regarded as a milestone in deep learning.

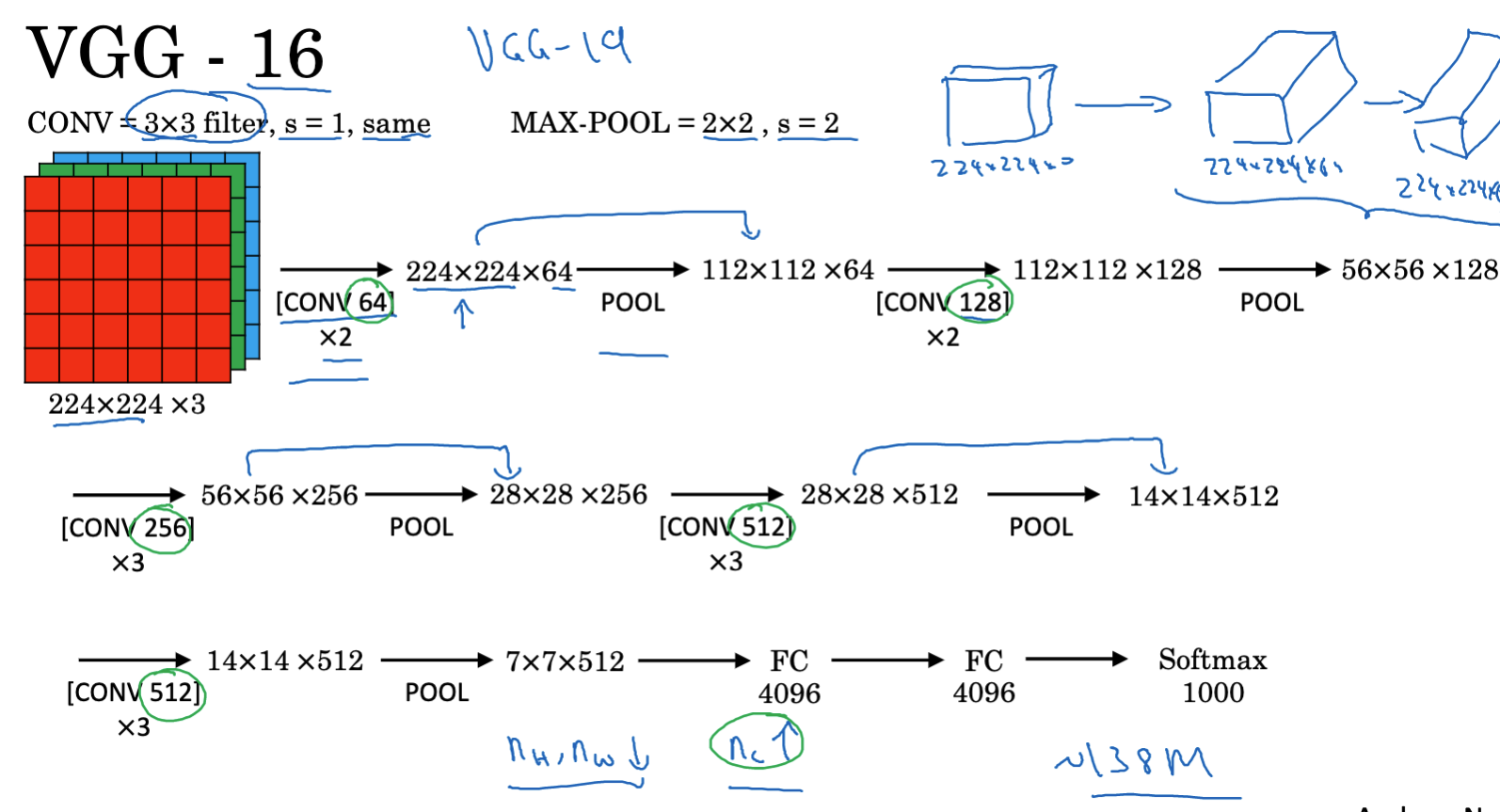

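Next came VGGNet. Its structure is simpler and leans more heavily on convolution layers, which makes the architecture very uniform:

- Every convolution filter is 3x3 with stride 1 and same padding.

- Every max pooling layer uses a 2x2 filter with stride 2.

- The network (VGG-16) has 16 layers with weights and about 138 million parameters in total.

- The structure is clean and easy to follow. Despite the very large number of trainable parameters, its uniformity has kept it in wide use.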
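The 16 weight layers and the roughly 138 million parameters can be reproduced with a short count. The sketch below assumes the standard VGG-16 layout from the VGG paper (a 224x224x3 input, five convolution stages, and fully connected layers of 4096, 4096, and 1000 units), none of which is spelled out in the text above.

```python
# Numbers are output channels of 3x3 same convolutions;
# "M" is a 2x2 max pooling layer with stride 2.
cfg = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
       512, 512, 512, "M", 512, 512, 512, "M"]

size, channels = 224, 3
conv_params = 0
weight_layers = 0
for item in cfg:
    if item == "M":
        size //= 2  # pooling halves height and width
    else:
        conv_params += (3 * 3 * channels + 1) * item  # weights + biases
        channels = item
        weight_layers += 1

fc_sizes = [size * size * channels, 4096, 4096, 1000]
fc_params = sum((n_in + 1) * n_out for n_in, n_out in zip(fc_sizes, fc_sizes[1:]))
weight_layers += 3

print(weight_layers)            # 16
print(conv_params + fc_params)  # 138357544
```

Most of the parameters come from the first fully connected layer (7x7x512 inputs to 4096 units), not from the convolutions.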

VGGNet's clean, readable structure made it an important reference for later network designs. Together, these networks established deep convolutional neural networks as the standard approach in computer vision.

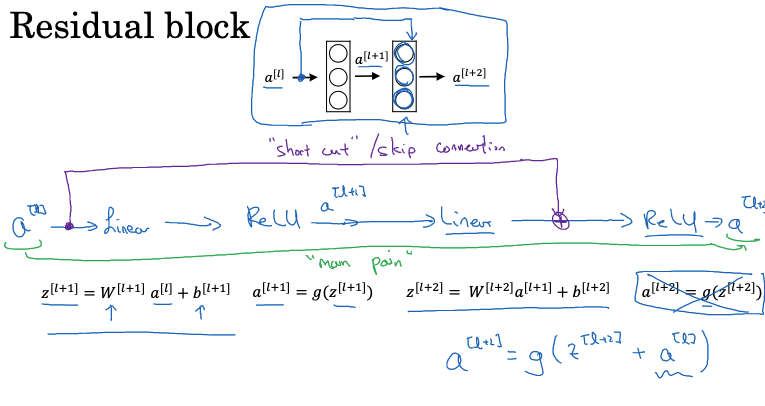

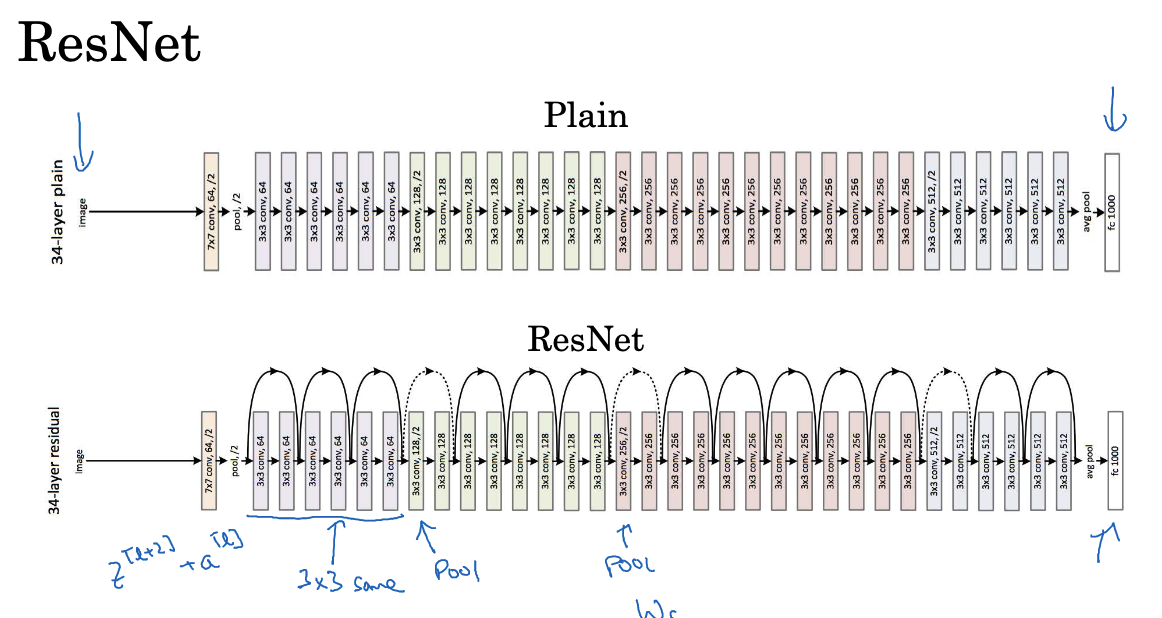

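Residual Networks (ResNets)

As models get deeper, vanishing and exploding gradients become increasingly problematic. Residual networks (ResNets) were introduced to address this, and their key innovation is the "skip connection". A skip connection passes the output of an earlier layer through a "shortcut" directly to a point further along in the network, so a later layer receives not only the output of the layer just before it but also the unmodified activations of the earlier layer. This makes it easy for the network to learn the identity function, because it can simply copy the activations of an earlier layer to a deeper one.

The effect is that each block only has to learn the difference between its input and its desired output, the "residual". To represent the identity function, the block only needs to drive the residual to zero. A residual block consists of a main path and a shortcut path (the skip connection). Information can travel along the shortcut straight to deeper layers, avoiding the problems it might meet on the main path. Adding a shortcut to a layer's pre-activation and then applying the ReLU nonlinearity turns the layers it spans into a residual block, and stacking many such blocks yields a deep network: the residual network.

In deep networks, adding more layers sometimes fails to improve performance and can even make training worse. A main reason is that, as depth grows, it becomes harder to choose parameters that learn even the identity function. With residual blocks, an extra block can easily learn a zero residual and pass its input through unchanged, even in a very deep network. This greatly reduces the difficulty of training deep networks.

During training, L2 regularization shrinks the weights. A residual block computes $a^{[l+2]} = g(z^{[l+2]} + a^{[l]})$, so if the weights and biases of the extra layers shrink to 0, then $z^{[l+2]} = 0$ and the block outputs $g(a^{[l]}) = a^{[l]}$ (for ReLU and non-negative activations), which is the identity function. For the addition in a skip connection to work, the dimensions of the earlier layer and the deeper layer must match. If they do not, an extra matrix can be applied on the shortcut to adjust them. ResNets make heavy use of 3x3 same convolutions precisely so that the input and output of a skip connection have the same dimensions and can be added.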
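The identity argument can be checked with a tiny example. The sketch below is a simplified residual block that uses fully connected layers in place of convolutions (an assumption made to keep it short); `residual_block` and `affine` are illustrative names. With all weights and biases set to zero, the block returns its input unchanged.

```python
def relu(v):
    return [max(0.0, x) for x in v]

def affine(W, b, v):
    """Compute W v + b for a list-of-rows matrix W."""
    return [sum(w * x for w, x in zip(row, v)) + bi for row, bi in zip(W, b)]

def residual_block(a, W1, b1, W2, b2):
    """a[l+2] = relu(z[l+2] + a[l]): the shortcut is added before the final ReLU."""
    a1 = relu(affine(W1, b1, a))   # main path, first layer
    z2 = affine(W2, b2, a1)        # main path, second layer (pre-activation)
    return relu([z + s for z, s in zip(z2, a)])  # add the shortcut, then ReLU

a = [0.5, 0.0, 2.0]  # non-negative, like the output of an earlier ReLU
zeros_W = [[0.0] * 3 for _ in range(3)]
zeros_b = [0.0] * 3

out = residual_block(a, zeros_W, zeros_b, zeros_W, zeros_b)
print(out)  # [0.5, 0.0, 2.0]
```

A plain two-layer block with the same zero weights would output all zeros, which is why learning the identity is so much harder without the shortcut.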

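Networks are trained with an optimization algorithm such as gradient descent. In a plain deep network (no residual blocks), the training error first falls and then rises again as layers are added, which is not what we want in practice. With ResNets, the training error can keep falling as layers are added. It may plateau at some point, but ResNets clearly help when training deep networks.

Some researchers have experimented with networks of more than 1000 layers, although networks that deep are rarely seen in practice. Still, skip connections let us feed the activations of intermediate layers to later layers, which helps a great deal with vanishing and exploding gradients and lets us train much deeper networks without seeing performance degrade.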

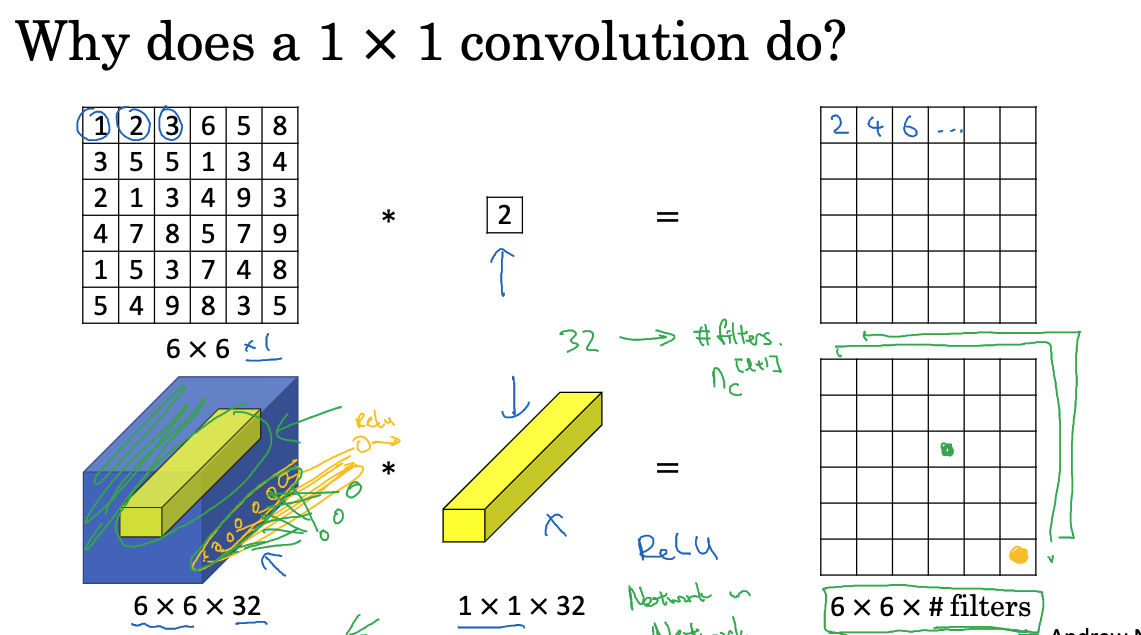

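1x1 Convolutions in Networks

When designing ConvNet architectures, the 1x1 convolution is a very useful idea. On a single-channel image, a 1x1 convolution looks pointless: it just multiplies every element by one number. On a multi-channel volume such as 6x6x32, however, it does something much more meaningful.

A 1x1 convolution visits every spatial position in the volume. At each position it multiplies the channel values by the corresponding filter weights, sums the products, and applies a ReLU nonlinearity. This can be seen as a fully connected layer applied at every position: it takes 32 numbers as input (the 32 channels at that position) and produces one output per filter.

The 1x1 convolution looks simple, but it matters a great deal, in two main ways:

- First, changing the number of channels. Suppose the input is a 128-channel feature map and the following layers need 512 channels. Applying 512 filters of size 1x1x128 produces a 512-channel output.

- Second, adding nonlinearity. A convolution on its own is a linear operation; a 1x1 convolution followed by a nonlinear activation such as ReLU yields a nonlinear mapping. The Inception network uses many 1x1 convolutions, both to reduce the number of channels and cut computation, and to increase the network's expressive power.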
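To make the "fully connected layer at every position" view concrete, here is a minimal sketch in plain Python. The function name `conv1x1` and the toy values are illustrative only; a real framework would do this with an optimized tensor operation.

```python
def conv1x1(volume, filters, biases):
    """Apply a 1x1 convolution followed by ReLU.

    volume:  H x W x C_in nested lists
    filters: C_out x C_in weights (one row per 1x1xC_in filter)
    """
    out = []
    for row in volume:
        out_row = []
        for pixel in row:  # pixel holds the C_in channel values at one position
            out_row.append([
                max(0.0, sum(w * x for w, x in zip(f, pixel)) + b)
                for f, b in zip(filters, biases)
            ])
        out.append(out_row)
    return out

# A 2x2 volume with 3 channels, reduced to 2 channels by two 1x1x3 filters.
volume = [[[1.0, 2.0, 3.0], [0.0, 1.0, 0.0]],
          [[2.0, 0.0, 1.0], [1.0, 1.0, 1.0]]]
filters = [[1.0, 0.0, -1.0],   # channel 0 minus channel 2
           [0.5, 0.5, 0.5]]    # half the sum of the channels
biases = [0.0, 0.0]

result = conv1x1(volume, filters, biases)
print(result[0][0])  # [0.0, 3.0]
```

The height and width are untouched; only the channel dimension changes, from 3 to the number of filters.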

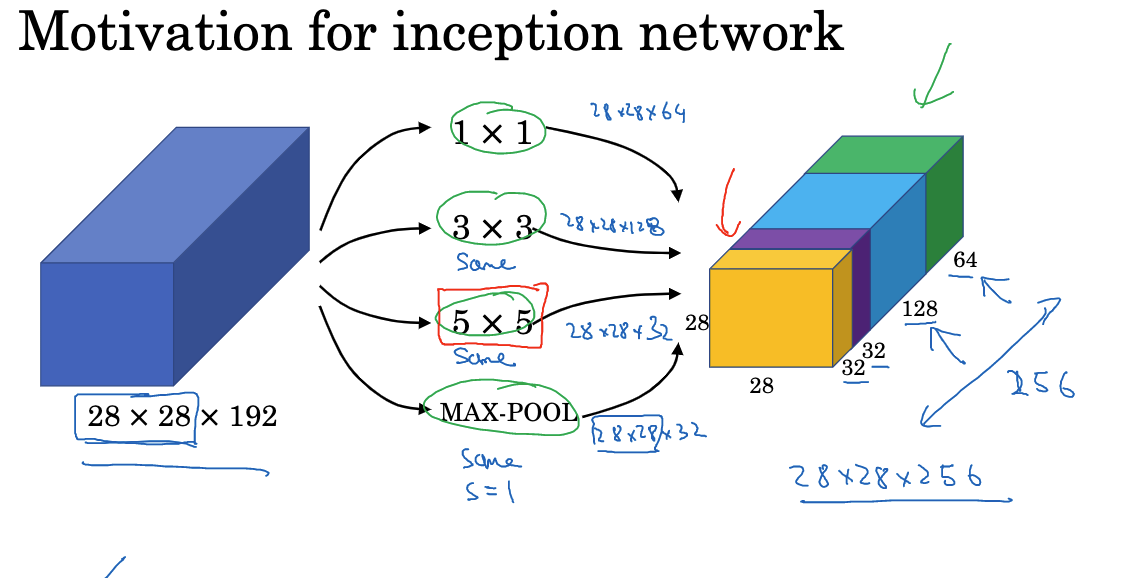

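Inception Network

The Inception network is a convolutional neural network architecture introduced in 2014 by a research team at Google that included Christian Szegedy. It changed the conventional thinking about how to organize convolution filters of different types and sizes, and pooling layers, within a deep network.

In a conventional CNN, designing each layer means making several choices, such as which filter size to use (1x1, 3x3, 5x5, and so on) or whether to use a pooling layer instead. The Inception network offers a distinctive answer: why not use all of them at once? This makes the architecture more complex, but it also increases the network's expressive power.

For example, a 1x1 convolution might produce a 28x28x64 output; a 3x3 convolution, a 28x28x128 output; a 5x5 convolution, a 28x28x32 output; and a pooling layer can be tried as well. The Inception module computes all of these and stacks the results side by side along the channel dimension.

For the stacking to work, each branch is padded so that its dimensions match, which is the same padding described earlier. The output of every branch then stays 28x28, the same height and width as the input.

The Inception module is powerful, but it also increases computational cost, especially for the larger filters. To address this, the Inception network uses so-called "bottleneck layers" to reduce dimensionality: a 1x1 convolution is placed before each larger convolution to reduce the depth (number of channels) and with it the computation.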
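A quick multiplication count shows how much the bottleneck saves. The numbers below are an assumed example, not taken from the text above: a 28x28x192 input, 32 filters of size 5x5 with same padding, and a bottleneck that first reduces 192 channels to 16.

```python
def conv_multiplications(out_h, out_w, n_filters, f, in_channels):
    """One f x f x in_channels product per output value."""
    return out_h * out_w * n_filters * f * f * in_channels

# Direct 5x5 convolution on the full 192-channel input.
direct = conv_multiplications(28, 28, 32, 5, 192)

# Same output shape, but a 1x1 bottleneck first shrinks 192 channels to 16.
bottleneck = (conv_multiplications(28, 28, 16, 1, 192)
              + conv_multiplications(28, 28, 32, 5, 16))

print(direct)      # 120422400
print(bottleneck)  # 12443648
print(round(direct / bottleneck, 1))  # 9.7
```

Under these assumptions the bottleneck cuts the cost by roughly a factor of ten while producing an output of the same shape.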

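An Inception module first extracts features with filters of different sizes, such as 1x1, 3x3, and 5x5, and adds a parallel max pooling branch. It then concatenates all the features along the channel dimension. The reasoning is that different filter sizes suit features at different scales: 1x1 convolutions combine information across channels, 3x3 and 5x5 convolutions detect local features in the spatial dimensions, and max pooling adds a degree of translation invariance. The parallel branches let the network adapt to several kinds of feature at once, and compared with committing to a single filter size, this design extracts richer features and improves classification accuracy.

Later versions (Inception V2, V3, V4, and others) refined the design, introducing batch normalization, residual connections, and similar mechanisms to train deeper Inception networks, and widening the network further. Inception shows how structural innovation can improve a model, and its multi-branch parallel structure inspired many later networks.

Interestingly, the name "Inception" comes from the film Inception. The line "We need to go deeper", associated with the film, became something of a meme in the deep learning community, standing for the drive to build deeper and more complex networks.

Overall, through its module design, its computational optimizations, and its regularization strategies, the Inception network advanced the development of convolutional neural networks and set new performance standards in image classification, object detection, and other vision tasks.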

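Efficient MobileNet

Deploying on mobile devices puts tighter demands on a model's computational efficiency. MobileNet was designed for this, using techniques such as depthwise separable convolution to build a lightweight, efficient network. A depthwise separable convolution splits a standard convolution into two steps: a per-channel depthwise convolution, followed by a 1x1 convolution that combines information across channels. A standard convolution does the spatial filtering and the cross-channel mixing at once; separating them greatly reduces both computation and parameter count.

- Normal convolution:

- Suppose the input has size $n\times n \times n_c$, where $n_c$ is the number of channels. Convolving it with an $f\times f \times n_c$ filter takes $f\times f\times n_c$ multiplications for each output value. The filter is then moved to compute the next output value. If there are $n_c'$ filters in total, the output has size $n_{out}\times n_{out}\times n_c'$.

- The total cost of a normal convolution is the product of the number of filter parameters, the number of filter positions, and the number of filters: $f\times f\times n_c \times n_{out}\times n_{out} \times n_c'$.

- Depthwise separable convolution: consists of two steps, a depthwise convolution and a pointwise convolution.

- Depthwise convolution: each filter is only $f\times f$ and is applied to a single channel, and the number of filters equals the number of input channels ($n_c$). The output has size $n_{out}\times n_{out}\times n_c$.

- Pointwise convolution: the depthwise output ($n_{out}\times n_{out}\times n_c$) is convolved with filters of size $1\times 1\times n_c$ to give the final output of size $n_{out}\times n_{out}\times n_c'$. Note that the number of pointwise filters equals the desired number of output channels, $n_c'$.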
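The saving can be worked out directly from these definitions. The sketch below counts multiplications for both approaches on an assumed toy example (6x6x3 input, 3x3 filters, 4x4 output, 5 output channels); the ratio of the two costs simplifies to $\frac{1}{n_c'} + \frac{1}{f^2}$.

```python
def normal_conv_cost(f, n_c, n_out, n_c_prime):
    """Filter parameters x filter positions x number of filters."""
    return f * f * n_c * n_out * n_out * n_c_prime

def depthwise_separable_cost(f, n_c, n_out, n_c_prime):
    depthwise = f * f * n_out * n_out * n_c      # one f x f filter per input channel
    pointwise = n_c * n_out * n_out * n_c_prime  # n_c' filters of size 1 x 1 x n_c
    return depthwise + pointwise

# Assumed toy sizes: 3x3 filters, 3 input channels, 4x4 output, 5 output channels.
f, n_c, n_out, n_c_prime = 3, 3, 4, 5
normal = normal_conv_cost(f, n_c, n_out, n_c_prime)
separable = depthwise_separable_cost(f, n_c, n_out, n_c_prime)

print(normal, separable)                     # 2160 672
print(round(separable / normal, 3))          # 0.311
print(round(1 / n_c_prime + 1 / f ** 2, 3))  # 0.311
```

With realistic channel counts (say $n_c' = 512$ and $f = 3$), the ratio is close to $1/9$, so the separable version costs roughly a tenth of the normal convolution.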

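MobileNet V2 builds on the original MobileNet with inverted residual blocks and linear bottleneck layers to improve efficiency further. Each block expands the number of channels with a 1x1 convolution, applies a depthwise convolution, and then projects back down with a linear 1x1 convolution, with a residual connection around the block. The residual connection helps stabilize training, and the narrow bottleneck keeps the activations passed between blocks small, which lowers memory cost.

The MobileNet family shows that careful design can greatly improve computational efficiency while preserving accuracy, so that complex deep networks can run efficiently on mobile devices. This makes wide use of deep learning on mobile practical.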

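EfficientNet: Scaling to the Device

Earlier work such as MobileNet V1 and V2 showed how to build more computationally efficient models. Where MobileNet uses a fixed architecture, EfficientNet proposes a network scaling method that can automatically adjust the size of the network to suit different hardware platforms. Its core idea is that scaling the network's depth, width, and input resolution together gives better performance. Here, width means the number of filters in a layer.

The underlying idea is to consider depth, width, and resolution jointly rather than tuning each one separately. By adjusting all three at once, EfficientNet aims to provide the best possible model for a given computational budget. For a powerful server with abundant compute, it might choose a large model with high-resolution input and many layers to maximize accuracy. For a phone or embedded device with limited compute, it might choose a smaller, shallower, lower-resolution model to keep inference fast.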
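One way to picture joint scaling is the compound scaling rule from the EfficientNet paper, where a single coefficient $\phi$ scales depth by $\alpha^\phi$, width by $\beta^\phi$, and resolution by $\gamma^\phi$. The sketch below uses the constants reported in that paper ($\alpha = 1.2$, $\beta = 1.1$, $\gamma = 1.15$); they are not given in the text above, and released EfficientNet variants round and adjust these values, so treat the output as illustrative.

```python
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15  # depth, width, resolution bases (assumed, see above)

def compound_scale(phi, base_depth=1.0, base_width=1.0, base_resolution=224):
    """Scale depth, width and input resolution together by one coefficient phi."""
    depth = base_depth * ALPHA ** phi
    width = base_width * BETA ** phi
    resolution = base_resolution * GAMMA ** phi
    # Cost grows roughly with depth * width^2 * resolution^2, about 2 ** phi here.
    flops_factor = (ALPHA * BETA ** 2 * GAMMA ** 2) ** phi
    return depth, width, resolution, flops_factor

for phi in range(4):
    d, w, r, flops = compound_scale(phi)
    print(phi, round(d, 2), round(w, 2), round(r), round(flops, 2))
```

Choosing a larger $\phi$ for a server and a smaller one for a phone gives a family of models from one base network, which is the sense in which a single scaling factor replaces separate hand tuning.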

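Another advantage of this approach is that it removes the need to hand-tune a model for each application. Finding the best model for a particular device or application normally takes a great deal of experimentation and fine-tuning. With EfficientNet, you choose a suitable scaling factor and the model is resized accordingly.

EfficientNet is not just a theoretical idea. The open-source community provides several implementations, so developers can readily pick a model size that fits their application and device. For anyone deploying deep learning models on mobile devices, embedded systems, or other environments with limited compute and memory, EfficientNet is an attractive option.

EfficientNet is built on the idea of model scaling and can construct a model that performs well under given hardware constraints. Letting hardware limits drive the network design helps neural networks serve real applications better. Its design philosophy offers a way to build a suitable model quickly for each application scenario, which makes deep learning easier to deploy across hardware platforms.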

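Transfer Learning

Transfer learning is a way to build strong computer vision models, especially when data is scarce. By reusing network weights and architectures already trained on large datasets (such as ImageNet, MS COCO, or PASCAL), we can carry those results over to a new, specific problem.

The main steps of transfer learning:

- Download a network architecture and weights that have already been trained on a large dataset such as ImageNet, MS COCO, or PASCAL.

- Use the downloaded weights and architecture as the initialization for your own network.

- Adapt the network to your problem, for example by replacing the classification layer or adding a new output layer.

- Freeze the earlier layers and train only the parameters of the later layers that relate to your problem.

- If the dataset is small, precompute the activations of the frozen layers and save them to disk to speed up training.

- Decide how many layers to freeze and how many to train based on the size of your dataset and your compute budget; with more data, more layers can be trained.

- Train your network this way to obtain good performance.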
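The freeze, precompute, and train-the-head steps can be sketched without any deep learning framework. In the toy example below, `frozen_features` is a hypothetical stand-in for the pretrained layers (a fixed mapping that is never updated), and the new output layer is a small logistic regression trained by stochastic gradient descent. In practice the frozen part would be a real pretrained network loaded through your framework.

```python
import math

def frozen_features(x):
    """Stand-in for frozen pretrained layers: a fixed mapping that is never updated."""
    return [x[0] + x[1], x[0] - x[1]]

def predict(w, b, feats):
    z = sum(wi * fi for wi, fi in zip(w, feats)) + b
    return 1.0 / (1.0 + math.exp(-z))

def train_head(features, labels, lr=0.5, epochs=100):
    """Train only the new output layer (logistic regression) with SGD."""
    w, b = [0.0] * len(features[0]), 0.0
    for _ in range(epochs):
        for feats, y in zip(features, labels):
            grad = predict(w, b, feats) - y  # gradient of the log loss w.r.t. z
            w = [wi - lr * grad * fi for wi, fi in zip(w, feats)]
            b -= lr * grad
    return w, b

inputs = [(1.0, 2.0), (2.0, 1.0), (-1.0, -2.0), (-2.0, -1.0)]
labels = [1, 1, 0, 0]

# Precompute the frozen activations once and reuse them in every epoch.
cached = [frozen_features(x) for x in inputs]
w, b = train_head(cached, labels)
predictions = [round(predict(w, b, f)) for f in cached]
print(predictions)  # [1, 1, 0, 0]
```

Because the frozen layers never change, their outputs for each training example are the same in every epoch, which is why caching them is safe and saves computation.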

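Transfer learning is widely used in computer vision and is especially suitable when data and compute are limited. Reusing trained weights and architectures makes it possible to build high-performing vision applications quickly without training from scratch. Many deep learning frameworks support freezing layers, setting which parameters are trainable, and precomputing activations.

In short, transfer learning gives computer vision applications a fast and efficient route to good performance with limited resources, while avoiding training a network from scratch.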

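Data Augmentation

Data augmentation enlarges a dataset by applying transformations (rotation, cropping, flipping, and so on) to the original data. These simple but effective techniques improve a model's ability to generalize.

Common data augmentation methods:

- Mirroring: flip the image vertically or horizontally.

- Random cropping: crop a randomly chosen part of the image.

- Color shifting: add random offsets to the R, G, and B channels.

- Combinations: several methods can be combined to increase the variety of the data.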
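The methods above are easy to sketch on an image stored as nested lists of RGB values. The function names, the shift range of 20, and the toy 6x6 image are illustrative assumptions; real pipelines would use an image library, but the logic is the same.

```python
import random

def mirror(image):
    """Flip an H x W x 3 image left to right."""
    return [row[::-1] for row in image]

def random_crop(image, crop_h, crop_w, rng):
    top = rng.randint(0, len(image) - crop_h)
    left = rng.randint(0, len(image[0]) - crop_w)
    return [row[left:left + crop_w] for row in image[top:top + crop_h]]

def color_shift(image, rng, max_shift=20):
    """Add one random offset per RGB channel, clipped to the 0..255 range."""
    shifts = [rng.randint(-max_shift, max_shift) for _ in range(3)]
    return [[[min(255, max(0, value + s)) for value, s in zip(pixel, shifts)]
             for pixel in row] for row in image]

def augment(image, rng, crop_h, crop_w):
    """Combine the three transforms; each call gives a different training sample."""
    if rng.random() < 0.5:
        image = mirror(image)
    return color_shift(random_crop(image, crop_h, crop_w, rng), rng)

rng = random.Random(0)  # fixed seed so the sketch is repeatable
image = [[[(r * 40 + c * 10) % 256, 128, 250] for c in range(6)] for r in range(6)]
sample = augment(image, rng, crop_h=4, crop_w=4)
print(len(sample), len(sample[0]), len(sample[0][0]))  # 4 4 3
```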

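For large training sets, loading and transforming images in multiple threads improves throughput. The hyperparameters of data augmentation can be tuned to the task at hand, and an open-source implementation is a good starting point. Overall, data augmentation improves a model's robustness and generalization and leads to better results in computer vision applications.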

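Current Challenges in Computer Vision

Computer vision is an interdisciplinary field that tries to get computers to parse and understand image or video data from the world. It has made remarkable progress in recent years, but several important challenges remain.

- Data volume: computer vision tasks usually need a lot of data to train models. Although datasets keep growing, for complex problems the available data still feels insufficient. Many other domains are less data-hungry by comparison. This is why data augmentation so often helps when training computer vision models.

- The importance of hand engineering: computer vision tries to learn a very complex function, so hand engineering plays an important role. When large amounts of labeled data are not available, hand engineering is a key route to good results. The field still relies heavily on it, including feature design, network architecture design, and the design of other components.

- Transfer learning: transfer learning is very useful when data is scarce. It takes a model pretrained on a large dataset and fine-tunes it on a specific task, and it is highly effective for computer vision problems when only a relatively small amount of data is available.

- The importance of benchmarks: much computer vision research focuses on doing well on benchmark datasets and winning competitions. Good benchmark results help show which algorithms work best. However, the methods used are not always suitable for real products, because they may need a lot of compute and running time.

- Ensembling and multi-crop: ensembling is a technique for doing well on benchmarks, but it is often impractical in real products. Multi-crop is a form of data augmentation applied to the test image: the classifier is run on several crops of the same image and the results are averaged, which improves performance.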
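Multi-crop at test time can be sketched as follows. This version uses the common 10-crop layout (four corners and the center, each also mirrored), which is an assumption since the text does not fix the number of crops, and `toy_classifier` is a hypothetical stand-in for a trained network.

```python
def crop(image, top, left, size):
    return [row[left:left + size] for row in image[top:top + size]]

def ten_crop(image, size):
    """Four corners plus the center, each with its mirrored copy: 10 crops in total."""
    h, w = len(image), len(image[0])
    positions = [(0, 0), (0, w - size), (h - size, 0), (h - size, w - size),
                 ((h - size) // 2, (w - size) // 2)]
    crops = [crop(image, top, left, size) for top, left in positions]
    return crops + [[row[::-1] for row in c] for c in crops]

def multi_crop_predict(classifier, image, size):
    """Average the class scores the classifier gives to each crop."""
    scores = [classifier(c) for c in ten_crop(image, size)]
    return [sum(col) / len(scores) for col in zip(*scores)]

def toy_classifier(c):
    """Hypothetical stand-in for a trained network: scores from mean brightness."""
    mean = sum(sum(row) for row in c) / (len(c) * len(c[0]))
    return [mean / 255.0, 1.0 - mean / 255.0]

image = [[(r * 8 + c) % 256 for c in range(8)] for r in range(8)]  # 8x8 grayscale
averaged = multi_crop_predict(toy_classifier, image, size=6)
print(len(ten_crop(image, 6)), len(averaged))  # 10 2
```

The cost is ten forward passes per test image instead of one, which is part of why this technique is more common on benchmarks than in production systems.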

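In short, deep learning in computer vision has its own particular practices. Hand engineering still plays an important role, while techniques such as transfer learning and data augmentation help achieve better results when data is scarce. Understanding computer vision architectures and techniques helps us build effective computer vision systems.

Summary

This post introduced several classic convolutional neural network case studies and their core ideas, which are valuable references for understanding how CNNs are designed and applied. The treatment here is fairly shallow; for more depth, read the original papers listed below.

Appendix: References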

- LeCun Y, Bottou L, Bengio Y, et al. Gradient-based learning applied to document recognition[J]. Proceedings of the IEEE, 1998, 86(11): 2278-2324.

- Krizhevsky A, Sutskever I, Hinton G E. Imagenet classification with deep convolutional neural networks[J]. Advances in neural information processing systems, 2012, 25.

- Simonyan K, Zisserman A. Very deep convolutional networks for large-scale image recognition[J]. arXiv preprint arXiv:1409.1556, 2014.

- He K, Zhang X, Ren S, et al. Deep residual learning for image recognition[C]//Proceedings of the IEEE conference on computer vision and pattern recognition. 2016: 770-778.

- Lin M, Chen Q, Yan S. Network in network[J]. arXiv preprint arXiv:1312.4400, 2013.

- Szegedy C, Liu W, Jia Y, et al. Going deeper with convolutions[C]//Proceedings of the IEEE conference on computer vision and pattern recognition. 2015: 1-9.

- Howard A G, Zhu M, Chen B, et al. Mobilenets: Efficient convolutional neural networks for mobile vision applications[J]. arXiv preprint arXiv:1704.04861, 2017.

- Sandler M, Howard A, Zhu M, et al. Mobilenetv2: Inverted residuals and linear bottlenecks[C]//Proceedings of the IEEE conference on computer vision and pattern recognition. 2018: 4510-4520.

- Tan M, Le Q. Efficientnet: Rethinking model scaling for convolutional neural networks[C]//International conference on machine learning. PMLR, 2019: 6105-6114.