Introduction to Convolutional Neural Networks

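A convolutional neural network (CNN) is a class of deep neural network designed for image processing. Borrowing ideas from the structure of the biological visual system, a CNN uses convolutions to extract spatial features from an image and then feeds them to fully connected layers for classification or prediction. Thanks to the convolution operation, CNNs perform exceptionally well on images and are widely used for image classification, object detection, semantic segmentation, and similar tasks. This post gives a brief introduction to CNN fundamentals, based on Week 1 of Course 4 of Andrew Ng's Deep Learning Specialization.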

Starting from Computer Vision

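Computer vision is a fast-moving area of deep learning. It lets self-driving cars locate nearby vehicles and pedestrians, powers face recognition, and helps applications show users relevant pictures of food, hotels, or scenery. Convolutional neural networks are the standard tool for such image tasks, including image classification, object detection, and neural style transfer. Image classification identifies what an image contains; object detection must also find the objects in the image and determine their positions; neural style transfer repaints one image in the style of another.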

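One challenge in computer vision is that inputs can be very large. A 1000x1000 RGB image has 1000x1000x3 = 3 million input features. With just 1,000 units in the first hidden layer of a fully connected network, the first weight matrix alone would have 3 billion parameters. That demands enormous computation and memory and makes overfitting likely. The convolution operation, one of the building blocks of CNNs, addresses this problem.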

Edge Detection

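Edge detection is a basic image-processing task: find the regions where pixel intensity changes abruptly, that is, the boundaries of objects. These boundaries trace the outlines of objects, and they can be found with convolutions.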

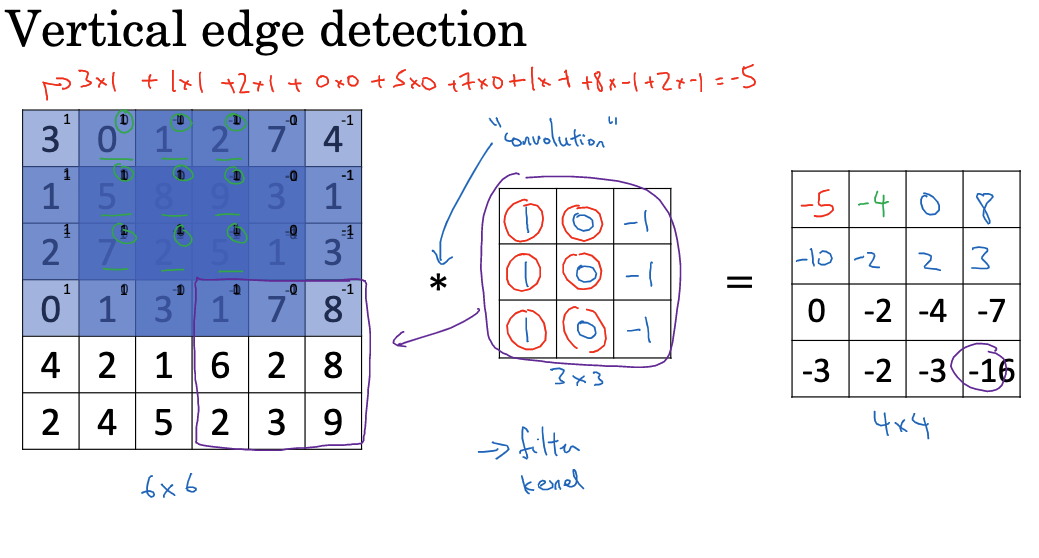

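Suppose we have a 6x6 grayscale image and want to detect its vertical edges. We can build a 3x3 filter (also called a convolution kernel) with the weight matrix

$$ \begin{bmatrix} 1 & 0 & -1 \\ 1 & 0 & -1 \\ 1 & 0 & -1 \end{bmatrix} $$

This filter essentially approximates a derivative in the horizontal direction, so it responds to left-to-right intensity changes, which is exactly what a vertical edge is. We slide the 3x3 filter over the image and compute one value at every position. At the top-left corner, for example, we multiply the filter element-wise with the 3x3 image patch beneath it and sum the products, which for the image in the figure gives -5. Repeating this at every position produces a new 4x4 feature map.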
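The sliding-window computation just described can be sketched in a few lines of NumPy. This is a minimal illustration, assuming a single-channel image stored as a 2-D array, no padding, and stride 1; the helper name `conv2d_valid` and the test image (left half 10, right half 0) are hypothetical choices for the example. Like deep learning frameworks, it does not flip the kernel.

```python
import numpy as np

def conv2d_valid(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """'Valid' 2-D cross-correlation: no padding, stride 1 (hypothetical helper)."""
    n_h, n_w = image.shape
    f_h, f_w = kernel.shape
    out = np.zeros((n_h - f_h + 1, n_w - f_w + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            # Element-wise product of the kernel and the patch under it, then sum.
            out[i, j] = np.sum(image[i:i + f_h, j:j + f_w] * kernel)
    return out

# The vertical edge detector from the text.
vertical = np.array([[1, 0, -1],
                     [1, 0, -1],
                     [1, 0, -1]], dtype=float)

# Assumed 6x6 test image: bright (10) left half, dark (0) right half.
image = np.zeros((6, 6))
image[:, :3] = 10

edges = conv2d_valid(image, vertical)
print(edges.shape)        # (4, 4)
print(edges[0].tolist())  # [0.0, 30.0, 30.0, 0.0]
```

Every row of the output is the same, so the two middle columns form the bright vertical strip that marks the edge.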

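Similarly, other filters detect horizontal edges, 45-degree edges, and so on. Extracting basic image features with filters is the main job of a convolutional layer. In practice a CNN learns many filters at once, detecting edges in every orientation and building a rich feature representation.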

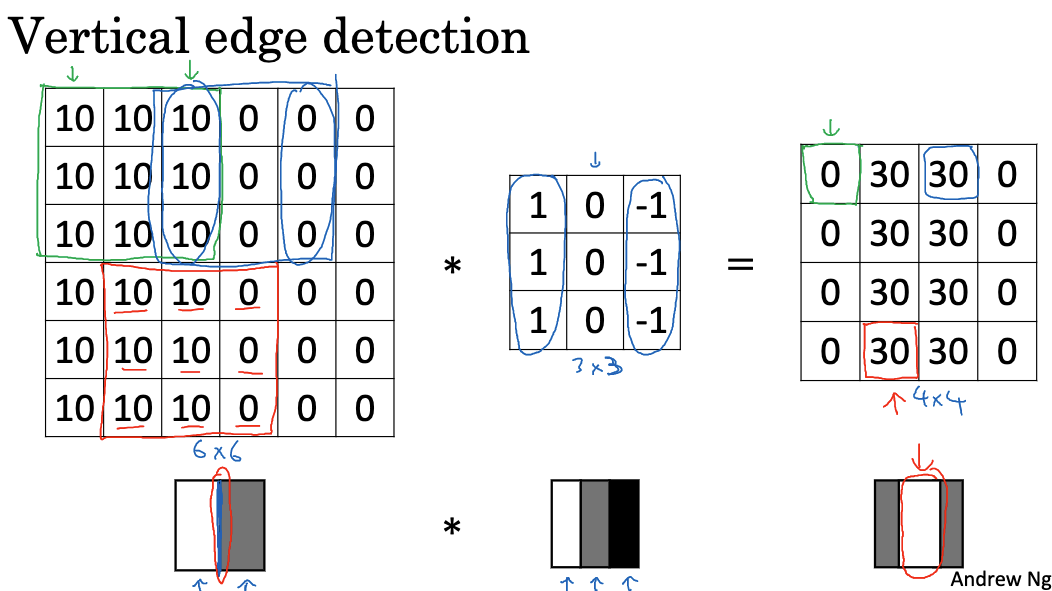

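Different deep learning frameworks provide their own convolution functions; TensorFlow, for example, offers tf.nn.conv2d. With a suitable filter, convolution picks out the vertical edges of an image. If the left half of an image has pixel value 10 and the right half 0, the vertical filter above produces an output with a bright vertical strip down the middle (values of 30). That strip marks the vertical edge in the original image where bright changes to dark.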

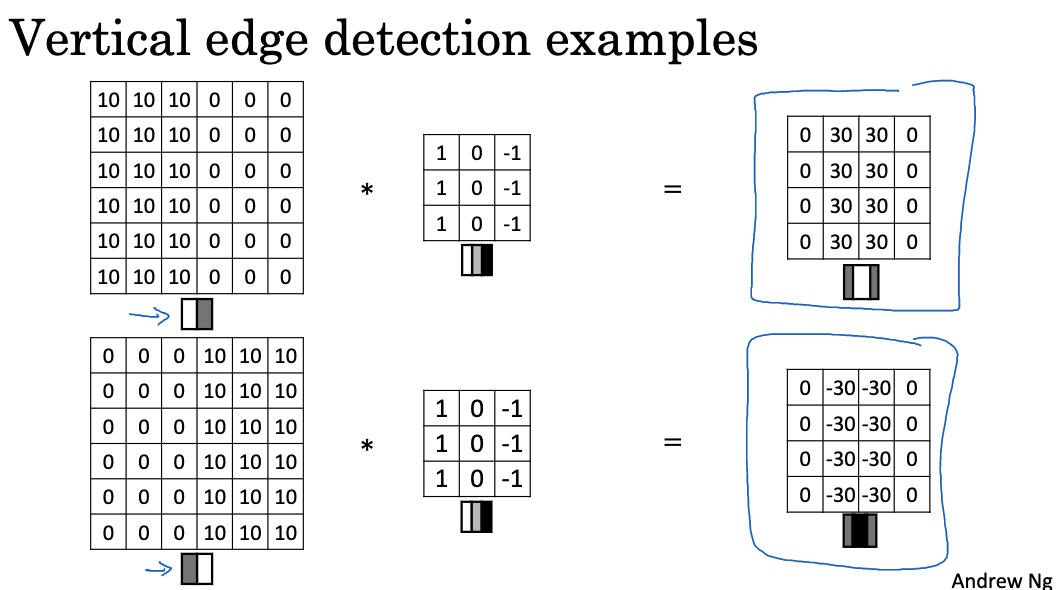

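Because the filter is sensitive to the direction of the transition, we can distinguish positive edges (bright to dark, going left to right) from negative edges (dark to bright). Different edge detectors separate different kinds of edges, and the algorithm can also learn the edge detectors by itself instead of having them set by hand.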

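In the figure, convolving a 6x6 image with the vertical edge detector reveals the vertical edge in the middle. If the colors are flipped so that the left side is dark and the right side bright, the result is reversed: the 30s become -30s, indicating a dark-to-bright transition. Taking the absolute value of the output discards the direction of the change, but the filter itself does distinguish bright-to-dark boundaries from dark-to-bright ones.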
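A small sketch of the sign flip, assuming the same hypothetical `conv2d_valid` helper (redefined compactly so the block runs on its own) and two assumed 6x6 images: one bright on the left, one bright on the right.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """'Valid' 2-D cross-correlation, stride 1 (hypothetical helper)."""
    f_h, f_w = kernel.shape
    out_h = image.shape[0] - f_h + 1
    out_w = image.shape[1] - f_w + 1
    return np.array([[np.sum(image[i:i + f_h, j:j + f_w] * kernel)
                      for j in range(out_w)] for i in range(out_h)])

vertical = np.array([[1, 0, -1]] * 3, dtype=float)

bright_to_dark = np.zeros((6, 6)); bright_to_dark[:, :3] = 10  # left side bright
dark_to_bright = np.zeros((6, 6)); dark_to_bright[:, 3:] = 10  # left side dark

pos = conv2d_valid(bright_to_dark, vertical)
neg = conv2d_valid(dark_to_bright, vertical)
print(pos[0].tolist())          # [0.0, 30.0, 30.0, 0.0]
print(neg[0].tolist())          # [0.0, -30.0, -30.0, 0.0]
print(np.abs(neg)[0].tolist())  # [0.0, 30.0, 30.0, 0.0]  (direction discarded)
```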

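Both the vertical and the horizontal detectors are 3x3 filters, and there has been some debate over exactly which numbers to put in them. The Sobel and Scharr filters are common alternatives; they give the center row more weight than the plain filter above, which changes how they respond to noise and edge orientation.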
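For comparison, the sketch below applies the commonly quoted 3x3 Sobel and Scharr vertical-edge kernels, and a horizontal filter obtained by transposing the plain vertical one. Sign and orientation conventions for these kernels vary between sources, and the helper and test images are assumptions for illustration.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """'Valid' 2-D cross-correlation, stride 1 (hypothetical helper)."""
    f_h, f_w = kernel.shape
    out_h = image.shape[0] - f_h + 1
    out_w = image.shape[1] - f_w + 1
    return np.array([[np.sum(image[i:i + f_h, j:j + f_w] * kernel)
                      for j in range(out_w)] for i in range(out_h)])

sobel_vertical = np.array([[1, 0, -1],
                           [2, 0, -2],
                           [1, 0, -1]], dtype=float)
scharr_vertical = np.array([[3, 0, -3],
                            [10, 0, -10],
                            [3, 0, -3]], dtype=float)
# Transposing a vertical-edge filter gives the matching horizontal-edge filter.
horizontal = np.array([[1, 0, -1]] * 3, dtype=float).T

left_bright = np.zeros((6, 6)); left_bright[:, :3] = 10  # vertical edge
top_bright = np.zeros((6, 6)); top_bright[:3, :] = 10    # horizontal edge

print(conv2d_valid(left_bright, sobel_vertical)[0].tolist())   # [0.0, 40.0, 40.0, 0.0]
print(conv2d_valid(left_bright, scharr_vertical)[0].tolist())  # [0.0, 160.0, 160.0, 0.0]
print(conv2d_valid(top_bright, horizontal)[:, 0].tolist())     # [0.0, 30.0, 30.0, 0.0]
```

All three locate the edge in the same place; they differ in how strongly each row of the window is weighted.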

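Instead of hand-picking them, a neural network can learn these filter parameters through backpropagation, which lets it capture the statistics of the data better. Networks learn low-level features such as edges this way, sometimes more robustly than hand-picked filters. By treating the nine numbers of a 3x3 filter as parameters, backpropagation can learn whatever 3x3 filter is useful and apply it at every position of the image, detecting vertical, horizontal, slanted, or other edges as needed.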
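To make "learning the nine numbers" concrete, here is a toy sketch: plain gradient descent on a mean-squared-error loss recovers a 3x3 filter from input/output pairs. This is a simplified assumption, not how a full CNN is trained: the targets are generated by a known vertical filter, whereas a real network learns its filters from the task loss via backpropagation through many layers. The data sizes, learning rate, and step count are arbitrary choices.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

rng = np.random.default_rng(0)
target = np.array([[1, 0, -1]] * 3, dtype=float)  # the filter we hope to recover

# Assumed toy data: random 8x8 images and the edge maps the target filter produces.
images = rng.standard_normal((50, 8, 8))
patches = sliding_window_view(images, (3, 3), axis=(1, 2))  # shape (50, 6, 6, 3, 3)
targets = np.einsum("nijab,ab->nij", patches, target)

W = np.zeros((3, 3))  # the 9 learnable filter weights
lr = 0.1
for step in range(500):
    preds = np.einsum("nijab,ab->nij", patches, W)  # convolve every image with W
    err = preds - targets
    # Gradient of the mean squared error with respect to each filter weight.
    grad = 2 * np.einsum("nij,nijab->ab", err, patches) / err.size
    W -= lr * grad

print(np.round(W, 3))  # should be very close to the target filter
```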

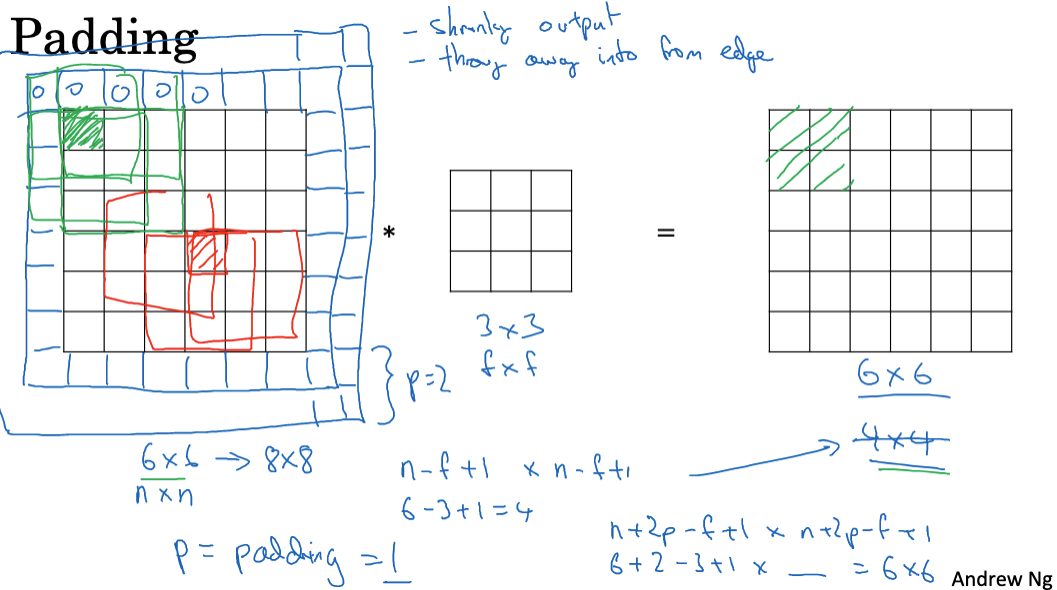

Padding and Strided Convolutions (Padding, Strided Convolutions)

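Without padding, each convolution shrinks the image: an n x n input convolved with an f x f filter gives an (n - f + 1) x (n - f + 1) output. In addition, pixels on the border are covered by far fewer filter positions than pixels in the center, so information near the edges is underused. Padding addresses both problems by adding a border of p extra pixels around the input before convolving, so the output becomes (n + 2p - f + 1) x (n + 2p - f + 1). Common schemes include zero padding and symmetric padding; zero padding, which simply fills the border with 0s, is the simplest. Choosing p = (f - 1)/2 for an odd filter size f keeps the output the same size as the input (a "same" convolution), while p = 0 is called a "valid" convolution.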
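A minimal sketch of "valid" versus "same" convolution using `np.pad` with zero padding. The averaging kernel and the 6x6 input are arbitrary assumptions; only the shapes matter here.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """'Valid' 2-D cross-correlation, stride 1 (hypothetical helper)."""
    f_h, f_w = kernel.shape
    out_h = image.shape[0] - f_h + 1
    out_w = image.shape[1] - f_w + 1
    return np.array([[np.sum(image[i:i + f_h, j:j + f_w] * kernel)
                      for j in range(out_w)] for i in range(out_h)])

image = np.arange(36, dtype=float).reshape(6, 6)
kernel = np.ones((3, 3)) / 9.0   # assumed 3x3 averaging filter

f = kernel.shape[0]
p = (f - 1) // 2                 # "same" padding for an odd filter size
padded = np.pad(image, p, mode="constant", constant_values=0)  # zero padding

print(conv2d_valid(image, kernel).shape)   # (4, 4): n - f + 1
print(padded.shape)                        # (8, 8): n + 2p
print(conv2d_valid(padded, kernel).shape)  # (6, 6): same as the input
```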

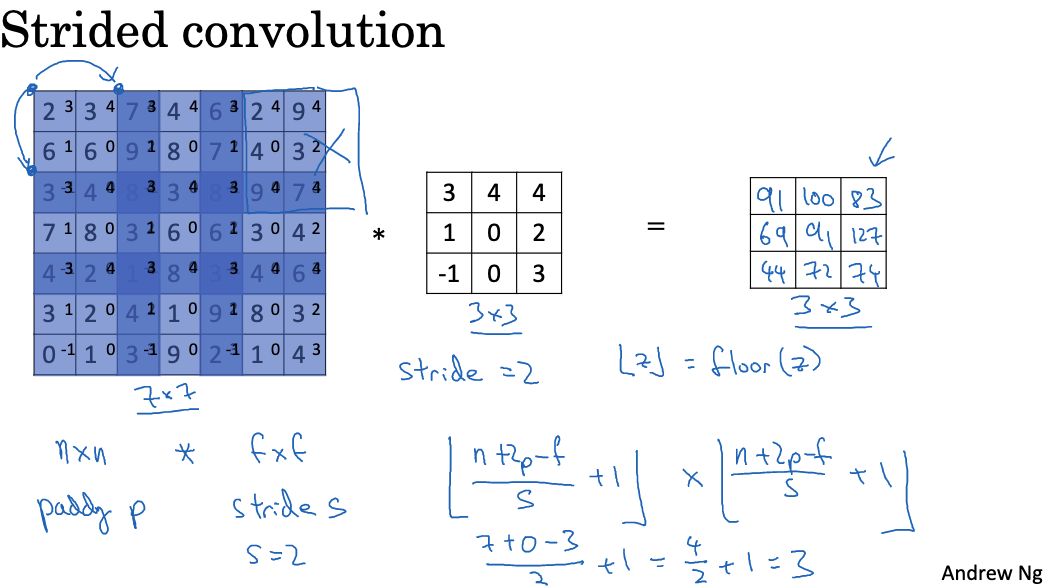

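A convolutional layer can also use a stride, which sets how far the filter moves at each step; a larger stride produces a smaller output feature map.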

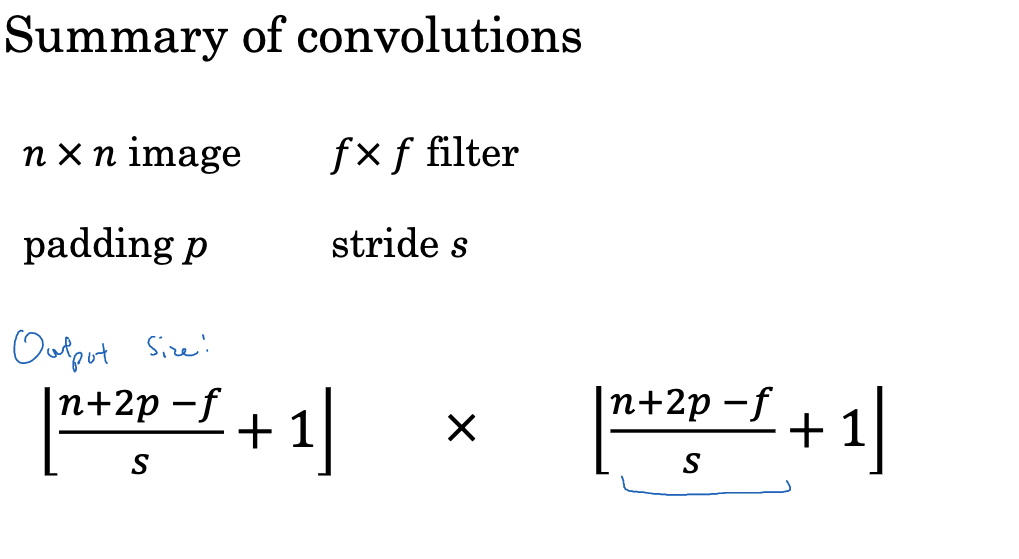

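For example, with a 7x7 image and a 3x3 filter, a stride of 1 gives the usual 5x5 output. With a stride of 2 the filter moves two pixels at a time, skipping every other position, and the output is 3x3. In general, for an n x n input, an f x f filter, padding p, and stride s, the output is n_out x n_out, where

$$ n_{\text{out}} = \left\lfloor \frac{n + 2p - f}{s} \right\rfloor + 1 $$

and the floor rounds down when the division is not exact.

The stride has a marked effect on output size. A stride greater than 1 skips positions, so the feature map shrinks and later layers have less to compute; deeper layers often use this for downsampling. The stride should not be too large, however, or information is lost. In early layers we usually want to extract all the features of the input, so many designs use a stride of 1 in the first convolutional layer.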
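The output-size formula and a strided convolution can be checked with a short sketch. It assumes square inputs and filters and no padding inside the strided helper; both function names are hypothetical.

```python
import numpy as np

def conv_output_size(n: int, f: int, p: int = 0, s: int = 1) -> int:
    """floor((n + 2p - f) / s) + 1"""
    return (n + 2 * p - f) // s + 1

def conv2d_strided(image, kernel, stride=1):
    """Cross-correlation with a stride and no padding (hypothetical helper)."""
    f = kernel.shape[0]
    out_n = conv_output_size(image.shape[0], f, 0, stride)
    out = np.zeros((out_n, out_n))
    for i in range(out_n):
        for j in range(out_n):
            r, c = i * stride, j * stride  # top-left corner of the current window
            out[i, j] = np.sum(image[r:r + f, c:c + f] * kernel)
    return out

image = np.random.default_rng(0).standard_normal((7, 7))
kernel = np.ones((3, 3))
print(conv2d_strided(image, kernel, stride=1).shape)  # (5, 5)
print(conv2d_strided(image, kernel, stride=2).shape)  # (3, 3)
print(conv_output_size(8, 3, p=0, s=2))               # 3, i.e. floor(5 / 2) + 1
```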

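When designing a network, stride and padding together control how feature maps shrink through the intermediate layers. One common setup uses 3x3 convolutions with stride 1 and padding 1, which preserve the spatial size, and leaves downsampling to pooling layers or strided convolutions. Note that the zeros added by padding can affect feature extraction near the borders to some extent, so padding is usually kept small: typically p = 0 ("valid") or p = (f - 1)/2 ("same"), which is 1 for a 3x3 filter.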

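Besides stride and padding, the number of filters sets the number of output channels, and filters of different sizes detect features at different scales. Combining these settings gives precise control over the shape of a convolutional layer's output.

Note that during convolution the filter must lie entirely within the image (or within its padded border); positions where it would extend beyond the image are not computed, which is why the output-size formula rounds down. Also, in some mathematics and signal-processing texts, convolution first flips the filter horizontally and vertically before the element-wise multiply and sum. Deep learning normally skips this flip, so strictly speaking the operation is cross-correlation, but the deep learning community still calls it convolution.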
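NumPy's 1-D functions make the flip visible: `np.convolve` flips the kernel (textbook convolution), while `np.correlate` does not (what CNN layers compute). The signal and kernel below are arbitrary assumptions. For a 2-D kernel the corresponding flip would be `np.flip(kernel)`, which reverses both axes.

```python
import numpy as np

signal = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
kernel = np.array([1.0, 0.0, -1.0])

corr = np.correlate(signal, kernel, mode="valid")  # no flip: the CNN "convolution"
conv = np.convolve(signal, kernel, mode="valid")   # textbook convolution: kernel flipped
conv_via_corr = np.correlate(signal, kernel[::-1], mode="valid")  # flip, then correlate

print(corr.tolist())                        # [-2.0, -2.0, -2.0]
print(conv.tolist())                        # [2.0, 2.0, 2.0]
print(np.array_equal(conv, conv_via_corr))  # True
```

Since the filter weights are learned anyway, skipping the flip loses nothing: the network simply learns the flipped weights.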

Convolutions over Volumes

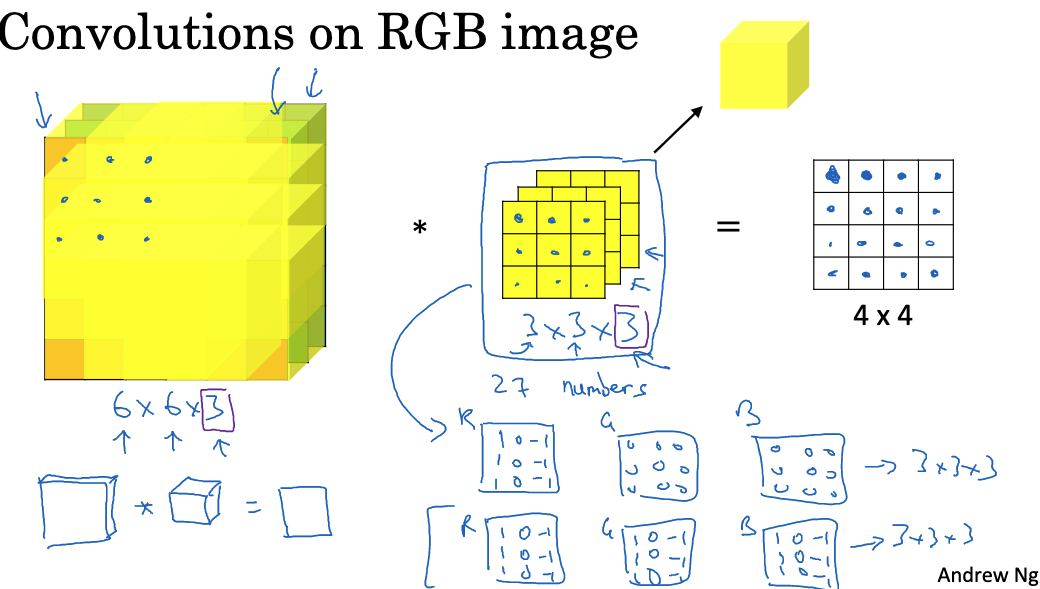

For an RGB image, the convolution runs over three dimensions: height, width, and color channels. This requires a three-dimensional filter.

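For example, for a 6 x 6 x 3 RGB image we can define a 3 x 3 x 3 filter, which likewise has three channels, R, G, and B. At each position, every value in the filter is multiplied by the image value at the corresponding pixel and channel, and all 27 products are summed to give one pixel of the output feature map. Repeating this over all positions gives a 4 x 4 two-dimensional feature map, each pixel of which combines information from all three color channels at that location. The filter's depth must match the number of input channels, so that it extracts features across space and color at the same time. One filter might respond only to edges in the green channel, for instance, while another responds to edges of any color; 3D filters can therefore learn rich color features.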

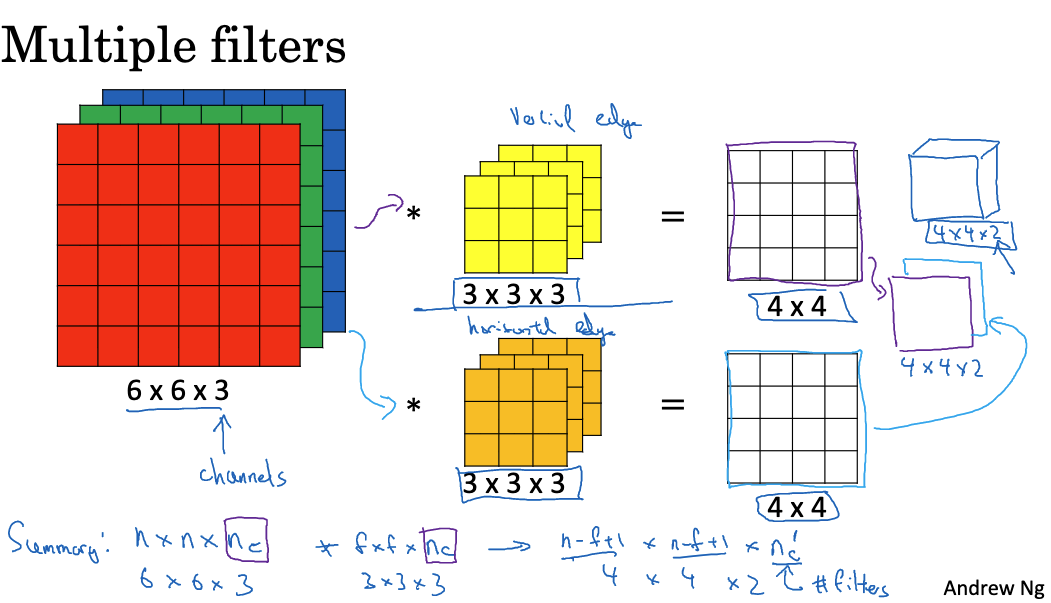

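To detect several features at once (say, vertical and horizontal edges), we apply several filters. Each filter produces its own output map, and the maps are stacked into a higher-dimensional output. Convolving a 6x6x3 image with two 3x3x3 filters, for instance, gives two 4x4 outputs that stack into a 4x4x2 output volume; the number of output channels equals the number of filters.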
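A sketch of convolution over a volume with several filters, assuming a channels-last layout (height, width, channels) and filters stored as an (f, f, n_C, n_filters) array. The helper name, the random RGB input, and the choice of a vertical and a horizontal filter are illustrative assumptions.

```python
import numpy as np

def conv3d_valid(volume, filters):
    """volume: (n_H, n_W, n_C); filters: (f, f, n_C, n_filters).
    Returns (n_H - f + 1, n_W - f + 1, n_filters). Stride 1, no padding (hypothetical helper)."""
    f, _, n_c, n_f = filters.shape
    assert volume.shape[2] == n_c, "filter depth must match the number of input channels"
    out_h = volume.shape[0] - f + 1
    out_w = volume.shape[1] - f + 1
    out = np.zeros((out_h, out_w, n_f))
    for k in range(n_f):                 # one output channel per filter
        for i in range(out_h):
            for j in range(out_w):
                # Multiply all f*f*n_C values and sum them into a single number.
                out[i, j, k] = np.sum(volume[i:i + f, j:j + f, :] * filters[:, :, :, k])
    return out

rng = np.random.default_rng(0)
rgb = rng.random((6, 6, 3))  # assumed 6x6x3 input
vertical = np.stack([np.array([[1, 0, -1]] * 3, dtype=float)] * 3, axis=-1)  # 3x3x3
horizontal = vertical.transpose(1, 0, 2)              # swap the two spatial axes
filters = np.stack([vertical, horizontal], axis=-1)  # (3, 3, 3, 2)

out = conv3d_valid(rgb, filters)
print(out.shape)  # (4, 4, 2)
```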

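Each convolutional layer of a CNN performs this operation. Different filters act like different neurons in the network, each specializing in one particular feature. Convolving over volumes works not only for RGB images but for inputs with any number of channels, and a layer can detect as many features as it has filters, which lets the network tackle more complex problems.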

One Layer of a Convolutional Network

With the convolution operation understood, let's see how to build one layer of a convolutional neural network.

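A convolutional layer works in four steps:

- Apply the filters: convolve the input with each filter, multiplying element-wise and summing to get each output value. This is repeated for every filter, so two filters give two separate results.

- Add a bias: add a bias to each filter's result. The bias is a single real number per filter, added to every element of that filter's output.

- Apply a nonlinearity: pass each biased result through a nonlinear activation function such as ReLU.

- Stack the results: combine the processed outputs into a new multi-channel output. With two filters, for example, the output is 4x4x2.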

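Throughout this process, each filter plays the role of a weight matrix in an ordinary neural network, applying a linear transformation to the input. Adding the bias and the nonlinearity then yields the equivalent of a layer's output after the activation function.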
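The four steps can be sketched as one forward pass. This assumes a channels-last layout, stride 1, no padding, and ReLU; the function name and random inputs are hypothetical.

```python
import numpy as np

def conv_layer_forward(a_prev, W, b):
    """One conv layer: filters -> add bias -> ReLU.
    a_prev: (n_H, n_W, n_C_prev); W: (f, f, n_C_prev, n_C); b: (n_C,).
    Stride 1, no padding (hypothetical helper)."""
    f, _, _, n_c = W.shape
    out_h = a_prev.shape[0] - f + 1
    out_w = a_prev.shape[1] - f + 1
    z = np.zeros((out_h, out_w, n_c))
    for k in range(n_c):
        # Step 1: apply filter k at every position.
        for i in range(out_h):
            for j in range(out_w):
                z[i, j, k] = np.sum(a_prev[i:i + f, j:j + f, :] * W[..., k])
        # Step 2: add the same bias to every element of this channel.
        z[:, :, k] += b[k]
    # Step 3: ReLU. Step 4: the channels are already stacked along the last axis.
    return np.maximum(z, 0)

rng = np.random.default_rng(1)
a_prev = rng.standard_normal((6, 6, 3))
W = rng.standard_normal((3, 3, 3, 2))
b = np.array([0.5, -0.5])
a = conv_layer_forward(a_prev, W, b)
print(a.shape)         # (4, 4, 2)
print((a >= 0).all())  # True
```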

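How many parameters does one convolutional layer have? That depends on the number and size of its filters. A 3x3x3 filter has 27 weights (one per element of its volume) plus one bias, 28 parameters in total. With 10 such filters, the layer has 280 parameters.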
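The count above as a one-line formula; the function name is a hypothetical helper. The image height and width do not appear anywhere in it.

```python
def conv_params(f, n_c_prev, n_filters):
    """Learnable parameters of a conv layer: (f*f*n_C_prev weights + 1 bias) per filter."""
    return (f * f * n_c_prev + 1) * n_filters

print(conv_params(3, 3, 1))   # 28
print(conv_params(3, 3, 10))  # 280
```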

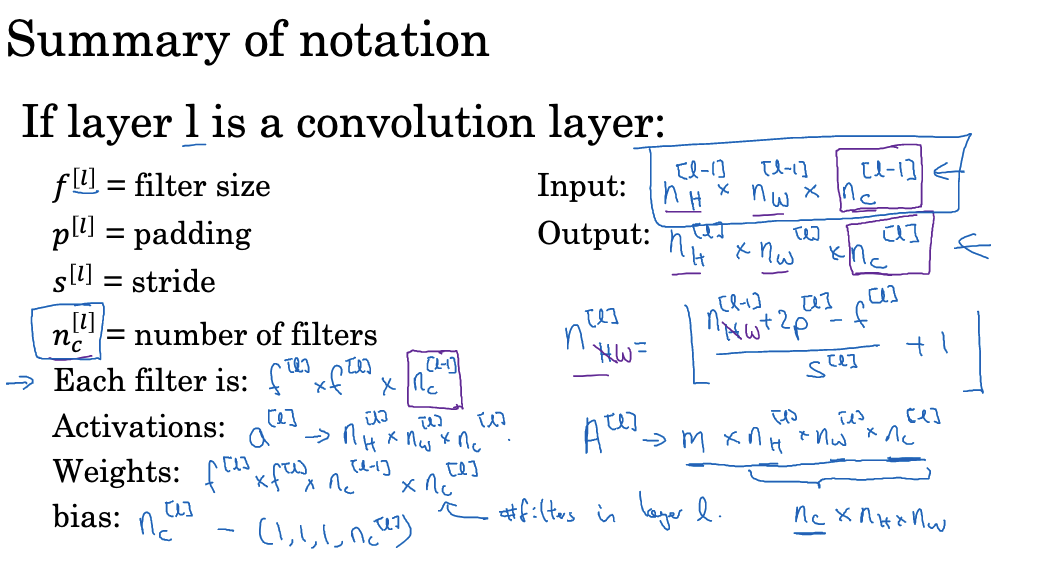

The accompanying figure summarizes the notation used for CNNs in this course; it is worth committing to memory.
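For reference, the per-layer relations for layer $l$ can be written in the course's bracketed-superscript notation as follows; this is a reconstruction and may differ in detail from the figure:

$$
\begin{aligned}
&f^{[l]}: \text{filter size}, \quad p^{[l]}: \text{padding}, \quad s^{[l]}: \text{stride}, \quad n_C^{[l]}: \text{number of filters} \\
&\text{Input: } n_H^{[l-1]} \times n_W^{[l-1]} \times n_C^{[l-1]}, \qquad \text{Output: } n_H^{[l]} \times n_W^{[l]} \times n_C^{[l]} \\
&n_H^{[l]} = \left\lfloor \frac{n_H^{[l-1]} + 2p^{[l]} - f^{[l]}}{s^{[l]}} \right\rfloor + 1, \qquad
 n_W^{[l]} = \left\lfloor \frac{n_W^{[l-1]} + 2p^{[l]} - f^{[l]}}{s^{[l]}} \right\rfloor + 1 \\
&\text{Each filter: } f^{[l]} \times f^{[l]} \times n_C^{[l-1]}, \qquad
 \text{Weights: } f^{[l]} \times f^{[l]} \times n_C^{[l-1]} \times n_C^{[l]}, \qquad
 \text{Biases: } n_C^{[l]}
\end{aligned}
$$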

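Notably, because of parameter sharing, one of the key properties of CNNs, the number of parameters in a layer is fixed no matter how large the input image is. Since the parameter count is small compared with the size of the image, this helps reduce the risk of overfitting.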

A Simple Convolutional Network Example

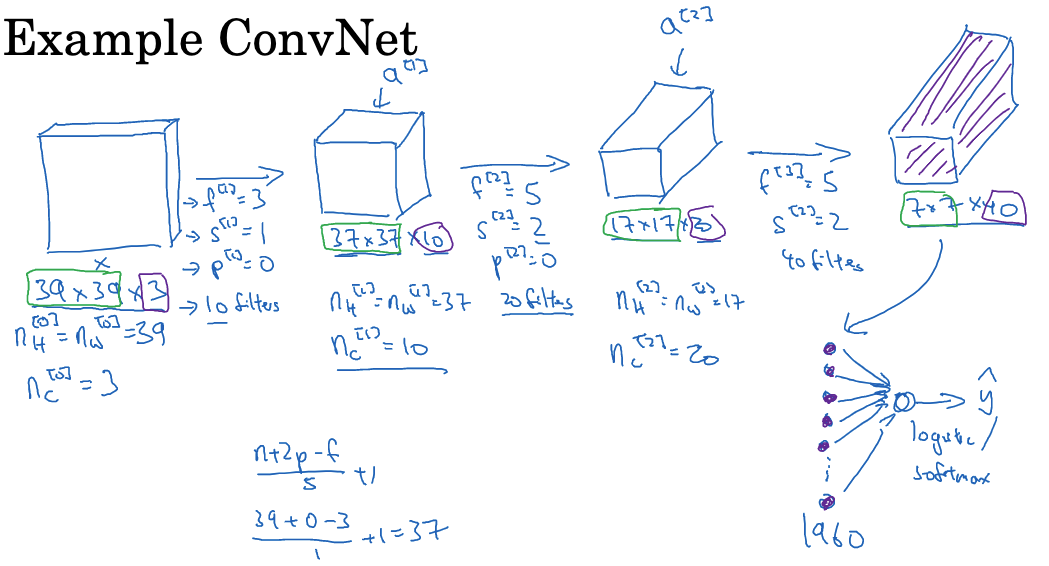

Let's look at an example of a deep convolutional network of the kind used for image classification and recognition.

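- The input is a 39 x 39 x 3 image, and the goal is to decide whether the image contains a cat.

- Network structure:

  - Layer 1: convolution with 10 filters of size 3x3 (no padding, stride 1); output 37 x 37 x 10.

  - Layer 2: convolution with 20 filters of size 5x5 (no padding, stride 2); output 17 x 17 x 20.

  - Layer 3: convolution with 40 filters of size 5x5 (no padding, stride 2); output 7 x 7 x 40.

- Finally, the 7 x 7 x 40 feature map is flattened into a vector of 1,960 units and fed to a logistic regression or softmax unit for classification.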
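The shapes in this example follow from the output-size formula; the sketch below just replays it layer by layer. The `conv_output_size` helper and the layer tuple format are assumptions for illustration.

```python
def conv_output_size(n, f, p=0, s=1):
    return (n + 2 * p - f) // s + 1

n, channels = 39, 3
# (filter size f, stride s, number of filters), all without padding
layers = [(3, 1, 10), (5, 2, 20), (5, 2, 40)]
for f, s, n_filters in layers:
    n = conv_output_size(n, f, p=0, s=s)
    channels = n_filters  # output channels = number of filters
    print(f"{n} x {n} x {channels}")
print("flattened:", n * n * channels)
```

It prints 37 x 37 x 10, 17 x 17 x 20, and 7 x 7 x 40, then a flattened length of 1960.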

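The hyperparameters to choose when designing a CNN include the filter size, stride, padding, and number of filters. As the network gets deeper, the height and width usually shrink while the number of channels grows. Besides convolutional (Conv) layers, typical CNNs also use pooling (Pool) and fully connected (FC) layers, both of which are simpler than convolutional layers.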

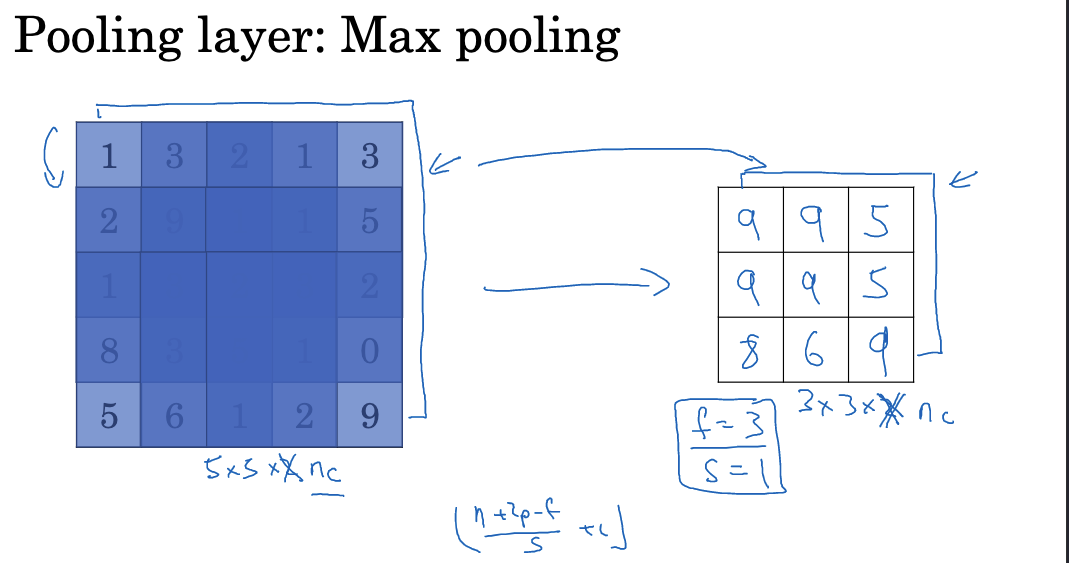

Pooling Layers

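Besides convolutional layers, ConvNets often use pooling layers to shrink feature maps. Pooling reduces the size of the representation, speeds up computation, and makes some of the detected features more robust. Common variants are max pooling and average pooling.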

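For example, max pooling with a 2x2 window and stride 2 on a 4x4 feature map gives a 2x2 output, each element of which is the largest value in the corresponding 2x2 region. The intuition: if a particular feature is detected anywhere within a region, the maximum keeps that strong response; if the feature is absent, the maximum over that region tends to be small. Max pooling thus preserves whether a feature was detected in each region while discarding its exact position.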

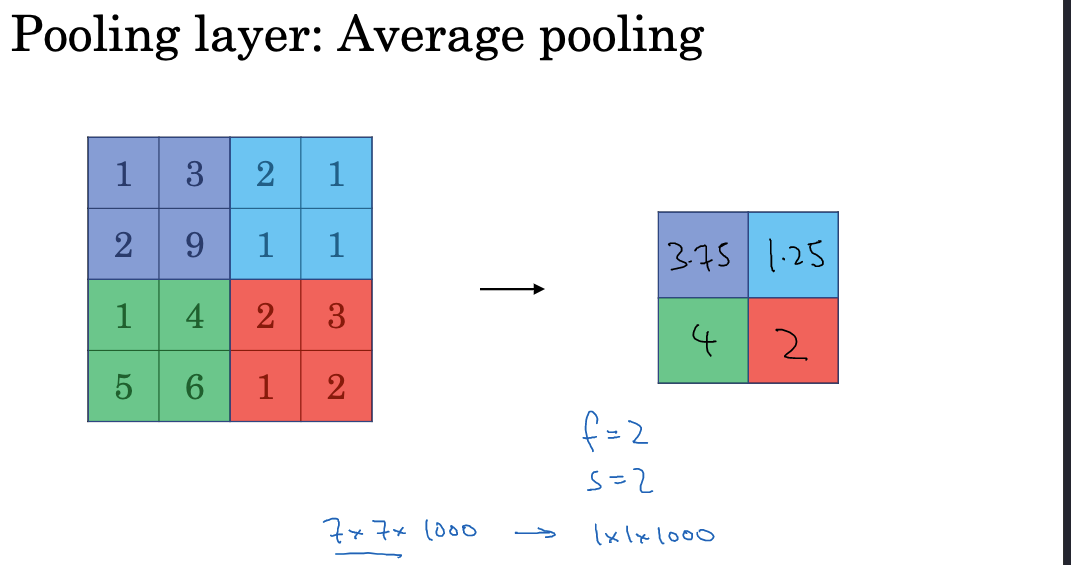

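Average pooling is another downsampling method: it takes the average of each region instead of the maximum, as illustrated in the figure.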
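Both pooling variants fit in one small sketch. The 4x4 feature map is assumed example data, the helper name is hypothetical, and it handles a single square channel with no padding.

```python
import numpy as np

def pool2d(x, f=2, s=2, mode="max"):
    """2-D pooling on a single square channel, no padding (hypothetical helper)."""
    out_n = (x.shape[0] - f) // s + 1
    reducer = np.max if mode == "max" else np.mean
    out = np.zeros((out_n, out_n))
    for i in range(out_n):
        for j in range(out_n):
            # Summarize one f x f window with its max (or mean).
            out[i, j] = reducer(x[i * s:i * s + f, j * s:j * s + f])
    return out

x = np.array([[1, 3, 2, 1],
              [2, 9, 1, 1],
              [1, 3, 2, 3],
              [5, 6, 1, 2]], dtype=float)  # assumed 4x4 feature map
print(pool2d(x, mode="max").tolist())      # [[9.0, 2.0], [6.0, 3.0]]
print(pool2d(x, mode="average").tolist())  # [[3.75, 1.25], [3.75, 2.0]]
```

Note that there is nothing to learn here: f, s, and the mode are fixed hyperparameters.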

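Max pooling is good at extracting the dominant features but discards detail in the feature map, so how aggressively to pool is a design trade-off. Besides reducing size, pooling layers have no learnable parameters, which keeps the network simpler and lowers the risk of overfitting. Note that pooling coarsens the spatial relationships within a feature map; overusing it loses spatial information, so it is usually applied in the intermediate layers. The pooling window size also needs care: too small a window summarizes little, while too large a window throws away detail.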

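The hyperparameters of a pooling layer are the filter size f and the stride s. A common choice is f = 2 and s = 2, which halves the height and width of the representation; another common choice is f = 3 and s = 2. Pooling is usually applied without padding, and its output size follows the same formula as convolution.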

A Complete Convolutional Network Example

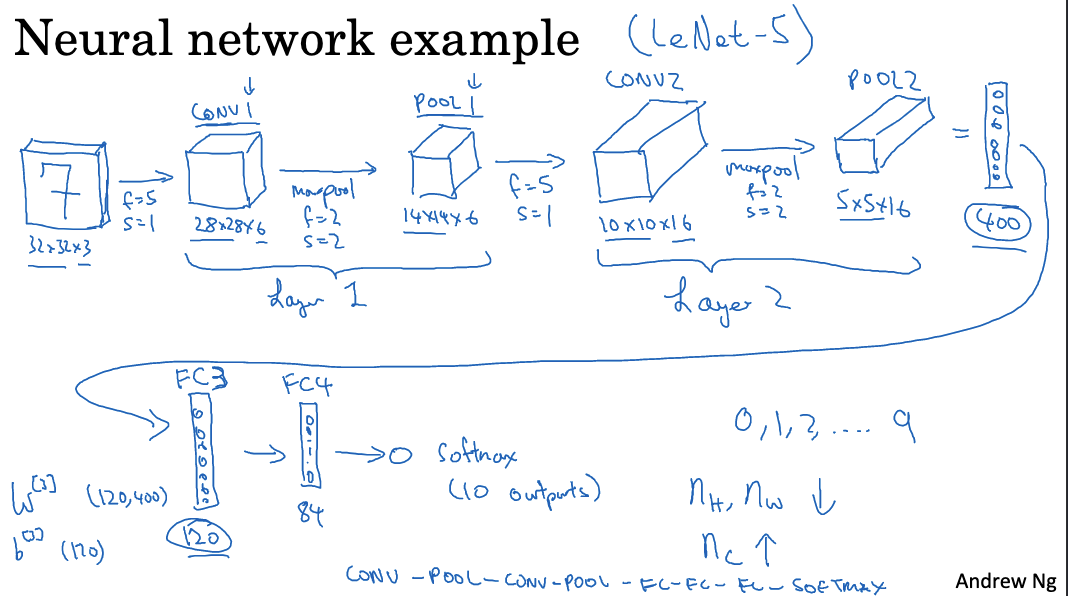

Below is a complete example of a CNN that combines convolutional, pooling, and fully connected layers:

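- The network is inspired by LeNet-5 but is not identical to it.

- The input is a 32x32x3 (RGB) image.

- Convolutional layer 1 (CONV1): 6 filters of size 5x5, stride 1; output 28x28x6.

- Pooling layer 1 (POOL1): 2x2 max pooling with stride 2; output 14x14x6.

- Convolutional layer 2 (CONV2): 16 filters of size 5x5, stride 1; output 10x10x16.

- Pooling layer 2 (POOL2): 2x2 max pooling with stride 2; output 5x5x16.

- The POOL2 output is flattened into a 400x1 vector (5 x 5 x 16 = 400).

- Next comes fully connected layer FC3 with 120 units.

- Then fully connected layer FC4 with 84 units.

- The output layer is a softmax layer with 10 units, used here for handwritten digit recognition.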
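A quick shape check for this architecture, assuming CONV2 has 16 filters, which is what a 400-unit flattened vector requires (5 x 5 x 16 = 400). Pooling uses the same size formula as convolution; the `out_size` helper is hypothetical.

```python
def out_size(n, f, s=1, p=0):
    return (n + 2 * p - f) // s + 1

n = 32
n = out_size(n, f=5)       # CONV1, 6 filters  -> 28 x 28 x 6
n = out_size(n, f=2, s=2)  # POOL1 (max)       -> 14 x 14 x 6
n = out_size(n, f=5)       # CONV2, 16 filters -> 10 x 10 x 16
n = out_size(n, f=2, s=2)  # POOL2 (max)       -> 5 x 5 x 16
flat = n * n * 16          # flattened input to FC3
print(n, flat)             # 5 400
```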

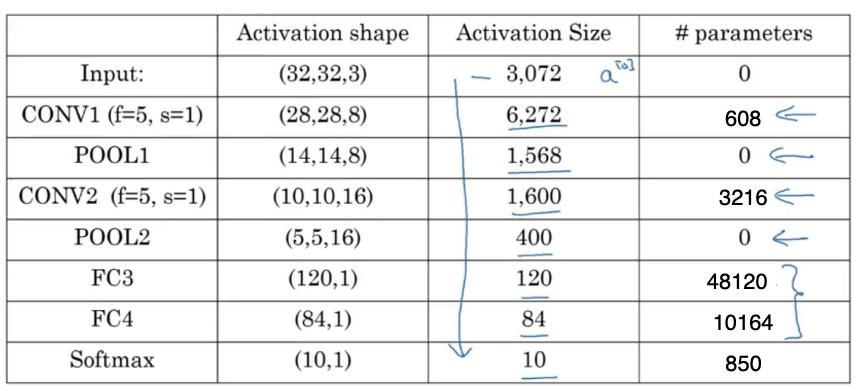

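Some details about the network:

- The input activation is 32x32x3, i.e. 3,072 activation values.

- The activation shape and size of each layer are listed in the table in the figure.

- The convolutional layers have relatively few parameters; the fully connected layers have many more.

- As the network gets deeper, the activation size gradually decreases and the number of channels increases.

- A common pattern is to alternate convolutional and pooling layers and finish with fully connected layers and a softmax layer.

- The layer count of a network usually includes only layers with weights and parameters; pooling layers are usually not counted separately.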
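To see why the fully connected layers dominate the parameter count, here is a rough tally for the architecture above. It assumes CONV1 has 6 filters and CONV2 has 16, so the numbers are this post's own arithmetic and may differ from the table in the figure; both helpers are hypothetical.

```python
def conv_params(f, c_in, c_out):
    return (f * f * c_in + 1) * c_out  # weights + one bias per filter

def fc_params(n_in, n_out):
    return (n_in + 1) * n_out          # weight matrix + bias vector

params = {
    "CONV1": conv_params(5, 3, 6),
    "POOL1": 0,                        # pooling has no learnable parameters
    "CONV2": conv_params(5, 6, 16),
    "POOL2": 0,
    "FC3": fc_params(400, 120),
    "FC4": fc_params(120, 84),
    "Softmax": fc_params(84, 10),
}
for name, count in params.items():
    print(f"{name:8s}{count:>8d}")
```

The two convolutional layers together have under 3,000 parameters, while FC3 alone has 48,120.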

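Advice on choosing hyperparameters:

- Rather than inventing your own hyperparameter settings, read the literature and see what others have used.

- A combination that worked well for someone else's application is likely to work for yours too.

- Deeper in the network, height and width usually shrink while the number of channels grows.

- Start from common settings, such as pooling layers with f = 2 and s = 2.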

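Designing a convolutional network involves balancing feature extraction, classification, and optimization constraints. As model designs keep evolving, CNNs continue to perform strongly in computer vision and image processing, and their design ideas are well worth studying.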

Advantages of Convolutional Layers

Compared with fully connected layers, convolutional layers have two important advantages:

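- Parameter sharing: within a convolutional layer, every receptive field uses the same filter weights. As a filter slides over the whole input image, it reuses one shared set of parameters, which greatly reduces the parameter count.

For example, take a 1000x1000x3 input and 100 filters of size 10x10x3. With sharing, the layer has only 100x10x10x3 = 30,000 weights (plus 100 biases). Without sharing, each of the (1000 - 10 + 1)^2 = 982,081 output positions would need its own 10x10x3 weights for every filter, about 2.9 x 10^10 weights, roughly six orders of magnitude more.

Parameter sharing not only saves storage but also greatly eases optimization: each update only has to adjust one set of weights per filter.

- Sparse connectivity: each output value depends on only a small part of the input, and the rest of the input has no effect on it, which cuts down the number of connections.

In a convolutional layer, each neuron connects only to a local region of the input rather than to all of it. With a 10x10x3 filter, for example, each neuron sees only 10x10x3 = 300 input values, which greatly reduces the number of parameters. This sparse connectivity makes the learned parameters well suited to detecting local features and to capturing the spatial structure of the input. It also helps against overfitting: each neuron responds only to local features, global features are built by combining them, and the model is less prone to high variance.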
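The parameter-sharing arithmetic can be checked directly. The "unshared" case assumes a locally connected layer with no padding and stride 1, where every output position gets its own weights; biases are ignored in both counts.

```python
n, f, c, k = 1000, 10, 3, 100         # input size, filter size, channels, number of filters
positions = (n - f + 1) ** 2          # output positions per filter (no padding, stride 1)
shared = k * f * f * c                # one weight set per filter, reused everywhere
unshared = k * positions * f * f * c  # a separate weight set at every output position
print(positions)           # 982081
print(shared)              # 30000
print(unshared)            # 29462430000
print(unshared // shared)  # 982081
```

The saving factor is exactly the number of output positions, which is why it grows with image size.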

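Convolutional networks can also capture translation invariance: an image shifted by a few pixels produces similar features and should still receive the same label.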
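Strictly speaking, a convolution is translation-equivariant: shifting the input shifts the feature map by the same amount. Invariance of the final label comes only approximately, from pooling and later layers. The sketch below checks the equivariance part on random data; the 8x10 "scene", the crops, and the helper are assumptions.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def conv2d_valid(image, kernel):
    """'Valid' cross-correlation via sliding windows (hypothetical helper)."""
    windows = sliding_window_view(image, kernel.shape)  # (out_h, out_w, f, f)
    return np.einsum("ijab,ab->ij", windows, kernel)

rng = np.random.default_rng(0)
scene = rng.standard_normal((8, 10))
kernel = rng.standard_normal((3, 3))

view = scene[:, :8]      # 8x8 crop of the scene
shifted = scene[:, 1:9]  # same scene, content moved one pixel to the left

a = conv2d_valid(view, kernel)
b = conv2d_valid(shifted, kernel)
# The feature map of the shifted input is the original feature map shifted by one column.
print(np.allclose(a[:, 1:], b[:, :-1]))  # True
```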

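Training a CNN follows the usual recipe: randomly initialize the parameters, compute the cost function, and then use gradient descent or another optimization algorithm to adjust the parameters and reduce the cost.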

In practice, you can borrow CNN architectures from published research papers and use them in your own applications.

Summary

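Hopefully this introduction has given you a first understanding of how convolutional neural networks work and what they are built from. Convolutional layers preserve the spatial information of an image while drastically reducing the number of parameters, which is key to CNNs' success on image tasks.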

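CNNs also have limitations: they are less suited to data that does not lie on a grid, and their layer-to-layer connectivity is less flexible than that of fully connected layers. Using a CNN well therefore means considering the nature of the problem and combining it with other network structures where appropriate.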

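CNNs are now widely used in computer vision and image processing, but their design and optimization still call for ongoing research and innovation. I look forward to further advances in CNN architecture design that help them solve complex real-world problems even better.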