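Hyperparameter Tuning, Batch Normalization, and Deep Learning Frameworks

This post covers hyperparameter tuning, batch normalization, and common deep learning frameworks, which together make up the final week of the second course in the Deep Learning Specialization. Let's go!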

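Hyperparameter Tuning

Hyperparameter tuning is a critical step in deep learning, because the choice of hyperparameters directly affects how well a model performs. This section looks at why tuning matters, which hyperparameters influence performance the most, and how to go about choosing their values.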

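Why Hyperparameter Tuning Matters

Hyperparameter tuning has a large effect on the performance of a deep learning model. Good settings improve training efficiency, generalization, and test results. Poor settings can make training slow, prevent the model from learning effectively, or cause overfitting.

More specifically, tuning matters for the following reasons:

- Hyperparameters directly control model complexity and therefore the model's capacity to learn. A model of suitable complexity can capture the patterns in the data without overfitting.

- Hyperparameters affect training speed. A sensible batch size and learning rate can shorten training considerably.

- Hyperparameters affect convergence. Good settings help the model converge faster and more stably.

- Hyperparameter choices are closely tied to regularization and so directly affect generalization.

- Hyperparameters shape the optimization process. Different combinations can produce very different loss curves during training.

- In deep learning it is usually hard to derive the best hyperparameter settings from theory, so we have to rely on experience and experimental results. Tuning is therefore an empirical process.

In short, hyperparameter tuning is an empirical process that bears directly on the final performance of a model. Finding a good combination takes repeated experiments and a substantial amount of time and compute, but the payoff can be a large improvement in model quality.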

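Hyperparameters That Matter Most

A deep learning model has many hyperparameters, but not all of them have a large effect on performance. The ones that usually matter most are:

1. Learning rate. The learning rate is one of the most important hyperparameters in training. It sets the size of the step that gradient descent takes in parameter space. If it is too small, training is slow or stalls; if it is too large, training becomes unstable or diverges. A good value within a reasonable range is usually found by experiment.

2. Batch size. The batch size is the number of samples used in each forward and backward pass. Small batches give noisier gradient estimates but allow more frequent parameter updates. Large batches give lower-variance gradient estimates, which makes training more stable, but each step needs more memory and computation and there are fewer updates per epoch. Values such as 16, 32, 64, or 128 are reasonable for most models.

3. Weight initialization. The initialization method affects both the speed and the outcome of training. Common choices include plain random initialization and Xavier initialization. A good initialization speeds up convergence and helps avoid vanishing or exploding gradients.

4. Choice of optimizer. Commonly used optimizers include SGD, Momentum, RMSProp, and Adam. Different optimizers can produce very different loss curves. As a rough guide, plain SGD is often adequate for simple models, while adaptive optimizers such as Adam tend to be easier to use on complex deep models.

5. Regularization strength. Regularization techniques (L1, L2, Dropout, and so on) suppress overfitting by keeping the model from becoming too complex. The regularization strength controls how hard this constraint pushes. Too little leads to overfitting and too much leads to underfitting, so the best value usually has to be found over several experiments.

6. Network depth and width. Width is the number of hidden units in each layer and depth is the number of layers. Together they determine the representational capacity and complexity of the network. A network that is deep and wide enough can capture the patterns in the data and avoid underfitting, while one that is too large for the data is more prone to overfitting.

7. Learning-rate decay schedule. Learning-rate decay means gradually lowering the learning rate as training proceeds. Common schedules include step decay and exponential decay. A good schedule can speed up convergence and improve test performance.

These seven hyperparameters are usually the main drivers of model performance. In practice, however, the model architecture and the dataset can make other hyperparameters just as important, so tuning should focus on the few hyperparameters that matter most for the model and data at hand.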
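To make the two decay schedules named in item 7 concrete, here is a minimal sketch. The function names and the default values (`drop`, `epochs_per_drop`, `k`) are assumptions chosen for illustration, not values from the course.

```python
import math


def step_decay(lr0, epoch, drop=0.5, epochs_per_drop=10):
    """Multiply the learning rate by `drop` once every `epochs_per_drop` epochs."""
    return lr0 * drop ** (epoch // epochs_per_drop)


def exponential_decay(lr0, epoch, k=0.05):
    """Shrink the learning rate smoothly as lr0 * exp(-k * epoch)."""
    return lr0 * math.exp(-k * epoch)


# Compare both schedules at a few epochs, starting from lr0 = 0.1.
for epoch in (0, 10, 20):
    print(epoch, step_decay(0.1, epoch), exponential_decay(0.1, epoch))
```

Step decay keeps the rate constant between drops, which is easy to reason about; exponential decay changes it a little at every epoch.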

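How to Choose Hyperparameter Values

Choosing hyperparameters usually relies on experiments and experience. Two common strategies are:

- Grid search: set a range of values for each hyperparameter and try every combination. This finds the best combination on the grid, but the cost grows quickly with the number of hyperparameters.

- Random search: set a range for each hyperparameter and sample values at random. For the same number of trials, a grid tests only a few distinct values of each hyperparameter, whereas random sampling tests a different value of every hyperparameter in every trial. When there are many hyperparameters and it is not known in advance which ones matter, random sampling therefore explores the space more effectively and has a better chance of finding a good setting.

Once a well-performing setting has been found, the search can be refined around it. This is the coarse-to-fine sampling scheme: set a smaller range for each hyperparameter around the good setting, then sample randomly or run a grid search within that range. This finds better settings with fewer trials.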
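The following sketch shows random search followed by one coarse-to-fine refinement. `toy_score` is a hypothetical stand-in for "train a model and return its validation score", and the hyperparameter names, ranges, and shrink factor are assumptions for illustration only.

```python
import random


def toy_score(lr_exp, hidden_units):
    """Hypothetical stand-in for training a model and scoring it.

    The score peaks at lr_exp = -2.5 and hidden_units = 120.
    """
    return -((lr_exp + 2.5) ** 2) - ((hidden_units - 120) / 100) ** 2


def random_search(bounds, n_trials, rng):
    """Sample n_trials points uniformly inside `bounds` and return the best one."""
    best_point, best_score = None, float("-inf")
    for _ in range(n_trials):
        point = {name: rng.uniform(lo, hi) for name, (lo, hi) in bounds.items()}
        score = toy_score(**point)
        if score > best_score:
            best_point, best_score = point, score
    return best_point, best_score


def shrink_bounds(bounds, center, factor=0.25):
    """Build a smaller search box around `center`, clipped to the old box."""
    new_bounds = {}
    for name, (lo, hi) in bounds.items():
        half = (hi - lo) * factor / 2
        new_bounds[name] = (max(lo, center[name] - half),
                            min(hi, center[name] + half))
    return new_bounds


rng = random.Random(0)
coarse_bounds = {"lr_exp": (-4.0, 0.0), "hidden_units": (50.0, 200.0)}

# Coarse pass over the full ranges, then a fine pass around the best point.
coarse_best, coarse_score = random_search(coarse_bounds, 25, rng)
fine_bounds = shrink_bounds(coarse_bounds, coarse_best)
fine_best, fine_score = random_search(fine_bounds, 25, rng)
best_score = max(coarse_score, fine_score)
```

In a real run `hidden_units` would be rounded to an integer, and each call to the scoring function would be a full training job, which is why the number of trials matters.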

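Using an appropriate scale matters when choosing hyperparameter values. Suppose plausible learning rates lie between 0.0001 and 1. Sampling uniformly on a linear scale would put about 90% of the samples between 0.1 and 1 and leave almost none for the small values. Because the effect of the learning rate depends roughly on its order of magnitude, it is better to sample on a logarithmic scale: draw an exponent $r$ uniformly from $[-4, 0]$ and use $10^r$ as the learning rate, so that each decade receives about the same number of trials. This is separate from learning-rate decay, where a chosen initial learning rate is multiplied during training by a factor slightly below 1 (for example 0.9 or 0.99), so that the model learns quickly at first and adjusts the weights more finely later.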
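As a small illustration of sampling on a logarithmic scale, the sketch below draws learning rates between 0.0001 and 1. The function name and the sample count are assumptions made for this example.

```python
import math
import random


def sample_learning_rate(rng, low=1e-4, high=1.0):
    """Draw a learning rate uniformly on a log10 scale between low and high."""
    exponent = rng.uniform(math.log10(low), math.log10(high))
    return 10 ** exponent


rng = random.Random(0)
samples = [sample_learning_rate(rng) for _ in range(10000)]

# The range covers four decades, so roughly a quarter of the samples
# should land in the lowest one, [1e-4, 1e-3).
share_below_1e_3 = sum(s < 1e-3 for s in samples) / len(samples)
print(round(share_below_1e_3, 2))
```

With uniform sampling on a linear scale, the same lowest decade would receive only about 0.1% of the samples.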

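Hyperparameter Tuning in Practice

Hyperparameter choices have a significant effect on results. The best choices differ from one application area to another, but they also have things in common: a configuration that has been validated in one area may work in another, and borrowing ideas across areas in this way has become common in deep learning.

A setting that was once the best can stop being the best as the dataset changes or the hardware is upgraded. It is therefore worth re-testing or re-evaluating your preferred hyperparameters at least once every few months.

In practice there are two ways of searching for hyperparameters:

- Panda mode: you carefully look after a single model, which suits situations where resources are limited. You may only be able to train one model or a very small number of them, so you watch the learning curve closely and keep adjusting the learning rate or other hyperparameters to improve it.

- Caviar mode: when resources are plentiful, you train many models in parallel with different hyperparameter settings and pick the setting that gives the best final result.

The choice between the two depends on your computing resources. If you have enough machines to train many models in parallel, use caviar mode: try many different hyperparameters and see what happens. In some application areas, however, such as online advertising and computer vision, the data are so large and the models so big that training many models at once is impractical, and panda mode is the better fit.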


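Some hyperparameter settings carry over across tasks and areas, while others depend strongly on the task. How to balance what transfers against what is task-specific depends on the problem at hand and on experience.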

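Batch Normalization

Batch normalization (BN) is an important technique in deep learning that speeds up training and can improve generalization. This section covers how it works, why it helps, and what to watch for when using it.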

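How Batch Normalization Works

The procedure is derived step by step below.

Suppose a mini-batch contains m samples and the outputs of some layer are $x_1, x_2, …, x_m$. First compute the mean and variance of this batch:

$$ \mu = \frac{1}{m} \sum_{i=1}^{m} x_i $$ $$ \sigma^2 = \frac{1}{m} \sum_{i=1}^{m} (x_i - \mu)^2 $$

Then use these two statistics to normalize: $$ \hat{x}_i = \frac{x_i - \mu}{\sqrt{\sigma^2 + \epsilon}} $$

Here $\epsilon$ is a very small constant added for numerical stability.

At this point the data have mean 0 and variance 1. Forcing every layer's inputs into exactly this distribution would limit what the layer can represent, so a learnable scale and shift follow: $$ y_i = \gamma \hat{x}_i + \beta $$ Here $\gamma$ and $\beta$ are learnable parameters that let the network choose the mean and variance of the output. With $\gamma = \sqrt{\sigma^2 + \epsilon}$ and $\beta = \mu$ the transform recovers the original values exactly, so normalization does not cost the network any expressive power.

This is the procedure used during training. At test time batch statistics may not be available, so exponential moving averages of the means and variances seen during training are used as estimates instead.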
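The training-time formulas above can be sketched in NumPy as follows. The function name, the array shapes, and the value of `eps` are assumptions for illustration; frameworks provide their own batch normalization layers.

```python
import numpy as np


def batchnorm_forward_train(x, gamma, beta, eps=1e-5):
    """Batch-normalize x of shape (m, features) using this batch's statistics."""
    mu = x.mean(axis=0)                     # per-feature batch mean
    var = x.var(axis=0)                     # per-feature variance, divides by m
    x_hat = (x - mu) / np.sqrt(var + eps)   # normalize to mean 0, variance 1
    y = gamma * x_hat + beta                # learnable scale and shift
    return y, mu, var


rng = np.random.default_rng(0)
x = rng.normal(loc=3.0, scale=2.0, size=(64, 4))   # batch of 64, 4 features
gamma = np.ones(4)
beta = np.zeros(4)
y, mu, var = batchnorm_forward_train(x, gamma, beta)
```

With `gamma` set to ones and `beta` to zeros, each column of `y` has mean close to 0 and variance close to 1; other values of `gamma` and `beta` move the output to whatever scale and offset the network learns.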

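Why Batch Normalization Works

Batch normalization helps deep neural networks for several reasons:

- It makes the model less sensitive to the initial parameter values. Weight initialization matters a great deal, yet random initialization gives no guarantee of a good starting point. By normalizing each layer's inputs, BN makes the network less dependent on where the weights start.

- It has a mild regularizing effect. The randomness in the batch statistics acts somewhat like dropout and slightly suppresses overfitting.

- It can speed up training noticeably. Normalization keeps the distribution of each layer's inputs more consistent from one step to the next, which makes it possible to train with a larger learning rate.

- It can reduce the vanishing-gradient problem to some extent, because each layer's inputs are rescaled to a reasonable range.

- Finally, because BN already regularizes slightly, a smaller weight decay may be enough to control overfitting, which can improve performance further.

In short, the strength of batch normalization is that it combines faster training, better generalization, fewer vanishing gradients, and some regularization. Together these effects make it one of the key tools for improving deep neural networks.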

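Batch Normalization at Test Time

During training, the mean and variance are computed from a mini-batch. At test time we often process one sample at a time, so a batch mean and variance cannot be computed in any meaningful way. To handle this, running estimates of the mean and variance are maintained during training and used at test time.

Specifically, the running mean and variance are computed as exponentially weighted averages:

$$ \mu_{running} = \alpha \mu_{running} + (1-\alpha)\mu_{batch} $$ $$ \sigma^2_{running} = \alpha \sigma^2_{running} + (1-\alpha)\sigma^2_{batch} $$

Here α is a decay factor slightly below 1 (for example 0.9 or 0.99), and $\mu_{batch}$ and $\sigma^2_{batch}$ are the mean and variance of the current batch. This yields an estimate of the statistics of the whole training distribution, which is then used for normalization at test time.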
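The running-average update and its use at test time can be sketched as below. The function names, the synthetic batches, and the choice `alpha = 0.9` are assumptions for illustration.

```python
import numpy as np


def update_running(running, batch_value, alpha=0.9):
    """Exponentially weighted average: keep alpha of the old value."""
    return alpha * running + (1 - alpha) * batch_value


def batchnorm_inference(x, running_mu, running_var, gamma, beta, eps=1e-5):
    """Normalize with the stored running statistics instead of batch statistics."""
    x_hat = (x - running_mu) / np.sqrt(running_var + eps)
    return gamma * x_hat + beta


rng = np.random.default_rng(0)
running_mu, running_var = np.zeros(3), np.ones(3)

# Simulated training: each step sees a batch drawn with mean 5 and std 2.
for _ in range(200):
    batch = rng.normal(loc=5.0, scale=2.0, size=(32, 3))
    running_mu = update_running(running_mu, batch.mean(axis=0))
    running_var = update_running(running_var, batch.var(axis=0))

# Test time: a single example is normalized without any batch statistics.
single_example = np.array([[5.0, 5.0, 5.0]])
output = batchnorm_inference(single_example, running_mu, running_var,
                             np.ones(3), np.zeros(3))
```

Because the running statistics are fixed after training, the same input always produces the same output at test time, regardless of what else is in the batch.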

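Practical Advice for Batch Normalization

The following suggestions can help you get the most out of BN:

- A BN layer is usually placed after a fully connected or convolutional layer and before the activation function. Try not to put BN at the very start or the very end of the network. For non-saturating activations such as ReLU, placing BN after the activation is also workable, and in practice both arrangements usually give acceptable results.

- BN can be combined with other regularizers such as Dropout: BN normalizes the input distribution while Dropout randomly perturbs the representation, so the two act in largely independent ways. Because of BN's normalizing effect, however, Dropout may be less effective than in a network without BN, so the combination may need extra tuning.

- When the dataset or the batch is small, the batch statistics are strongly affected by the noise of individual batches. Using a larger batch size or relying on statistics accumulated over many batches can help.

- Keep the order of operations straight: the batch statistics are computed during the forward pass, and $\gamma$ and $\beta$ are then updated by the optimizer together with the other network parameters.

- Wrap BN in a function or module so that it can be called conveniently anywhere in the network.

- With a small batch size the batch statistics are noisier, so a lower learning rate may be needed to keep training stable.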

Multi-class Classification

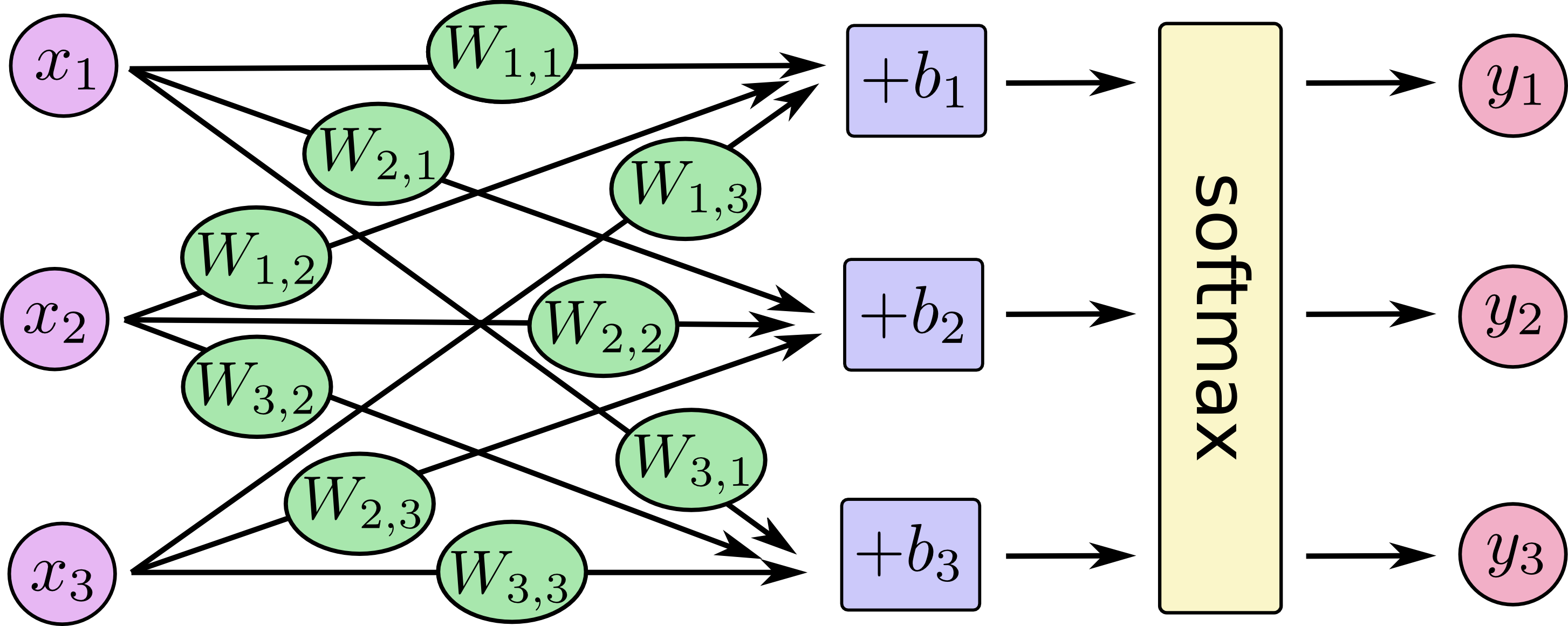

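For multi-class classification problems we usually use a softmax regression model. This section covers the concept, the principle, the implementation, and some practical tips.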

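Overview of Softmax Regression

Softmax regression generalizes logistic regression to more than two classes and can be viewed as a log-linear model for multi-class problems. It uses the softmax function to turn the network's outputs into a probability for each class. Unlike the One-vs-All approach, it does not require training several binary classifiers and yields the class probabilities directly, which makes it simpler and more efficient. It has become one of the standard solutions for multi-class classification.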

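How Softmax Regression Works

Suppose we have a dataset of m samples, each sample x belonging to one of n classes. We define a linear model that outputs, for each sample, a vector z with n elements, where each element is the "score" of the corresponding class. The goal is to train this model so that, for a sample x, the score of its true class is the highest.

To do this, we first use the softmax function to normalize z into a probability distribution p:

$$ p_i = \frac{e^{z_i}}{\sum_{j=1}^n e^{z_j}} $$

Here $p_i$ is the predicted probability that the sample belongs to class i, and $z_i$ is the raw score given by the linear model.

We then use the cross-entropy loss to measure the gap between the predictions and the true labels:

$$ J(\theta) = -\sum_{i=1}^m \sum_{j=1}^n y_{ij} \log(p_{ij}) $$

Here $y_{ij}=1$ if sample i belongs to class j and 0 otherwise, and $p_{ij}$ is the predicted probability that sample i belongs to class j.

Finally, minimizing the loss with gradient descent gives the trained softmax regression model. For a new sample, we compute its score vector z, convert it to probabilities with softmax, and predict the class with the highest probability.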
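The softmax and cross-entropy formulas above can be sketched in NumPy as follows. The example scores and labels are made up for illustration, and subtracting the row maximum is a standard numerical-stability step that is not part of the formula itself.

```python
import numpy as np


def softmax(z):
    """Row-wise softmax; subtracting the row maximum avoids overflow in exp."""
    shifted = z - z.max(axis=1, keepdims=True)
    exp_z = np.exp(shifted)
    return exp_z / exp_z.sum(axis=1, keepdims=True)


def cross_entropy(p, y_onehot):
    """Sum over samples and classes of -y_ij * log(p_ij), as in J(theta)."""
    return -np.sum(y_onehot * np.log(p))


# Two samples, three classes: raw scores z and one-hot labels y.
z = np.array([[2.0, 1.0, 0.1],
              [0.0, 0.0, 0.0]])
y = np.array([[1, 0, 0],
              [0, 0, 1]])

p = softmax(z)                  # each row is a probability distribution
loss = cross_entropy(p, y)
predicted = p.argmax(axis=1)    # class with the highest probability
```

The shift by the row maximum leaves the probabilities unchanged, because the same factor cancels in the numerator and the denominator.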

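Implementing Softmax Regression

With Python and a machine learning library such as TensorFlow, softmax regression is simple to implement. The main steps are:

- Build the computation graph that takes the features x as input and outputs the score vector z. For plain softmax regression z is a single linear layer; in a neural network classifier it is the output of the last fully connected layer, which follows the hidden layers and their nonlinear activations.

- Apply the softmax function to z to obtain the probabilities p. This can be done with tf.nn.softmax().

- Define the cross-entropy loss over all samples. tf.nn.sparse_softmax_cross_entropy_with_logits() can be used here; it takes the raw scores z (the logits) and integer class labels, and applies softmax internally.

- Choose an optimizer and iterate, updating the parameters to minimize the loss. Common optimizers include SGD and Adam.

- To predict the class of a new sample with the trained model, compute z, convert it to probabilities p with softmax, and pick the class with the highest probability.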
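The steps above can be sketched end to end. To keep every step visible, this sketch uses NumPy with a hand-written gradient rather than the TensorFlow calls named in the list. The synthetic data, the learning rate, and the number of steps are assumptions for illustration, and the loss is averaged over samples rather than summed so that the learning rate does not depend on the dataset size.

```python
import numpy as np


def softmax(z):
    """Row-wise softmax with the usual max-subtraction for stability."""
    shifted = z - z.max(axis=1, keepdims=True)
    exp_z = np.exp(shifted)
    return exp_z / exp_z.sum(axis=1, keepdims=True)


rng = np.random.default_rng(0)
n_classes, n_features, per_class = 3, 2, 100

# Synthetic data: one Gaussian blob per class (illustrative assumption).
centers = np.array([[0.0, 4.0], [4.0, 0.0], [-4.0, -4.0]])
x = np.vstack([rng.normal(c, 1.0, size=(per_class, n_features)) for c in centers])
labels = np.repeat(np.arange(n_classes), per_class)
y_onehot = np.eye(n_classes)[labels]

# Step 1: a single linear layer z = xW + b.
W = np.zeros((n_features, n_classes))
b = np.zeros(n_classes)
learning_rate = 0.1

for step in range(200):
    p = softmax(x @ W + b)                  # step 2: scores to probabilities
    grad_z = (p - y_onehot) / len(x)        # step 3: gradient of mean cross-entropy
    W -= learning_rate * (x.T @ grad_z)     # step 4: gradient descent update
    b -= learning_rate * grad_z.sum(axis=0)

# Step 5: predict the class with the highest probability.
p = softmax(x @ W + b)
accuracy = (p.argmax(axis=1) == labels).mean()
```

The gradient of the cross-entropy with respect to z is simply `p - y`, which is why the update needs no explicit derivative of the softmax.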

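Tips for Using Softmax Regression

Softmax regression is a simple and effective multi-class model. The following tips can help improve its performance:

- For problems with a large number of classes, use hierarchical softmax: classify coarsely first, then refine.

- Give error-prone classes a larger weight in the loss, or use similar measures, to balance accuracy across classes.

- Combine softmax regression with other scoring models in an ensemble to get better classification performance.

- Visualize the decision boundaries of the softmax model to judge whether the classification is reasonable, and adjust the model accordingly.

- A network trained with a softmax output can serve to pre-train feature representations, which can then be reused as the basis of downstream models for transfer learning.

- Adding batch normalization to the layers that feed the softmax can keep the scores in a stable numerical range and adds a little regularization. Whether it helps depends on the model, so treat it as something to try rather than a rule.

- The confidence of a prediction is useful too: it can be used to filter out low-confidence predictions or to route them to human review.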
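As an illustration of the last tip, the sketch below splits predictions into accepted ones and ones that need human review. The function name and the threshold of 0.8 are assumptions for illustration; a suitable threshold depends on the application.

```python
def route_predictions(probabilities, threshold=0.8):
    """Split predictions by confidence.

    `probabilities` is a list of per-class probability lists, one per sample.
    Returns two lists of (sample_index, predicted_class, confidence) tuples.
    """
    accepted, needs_review = [], []
    for index, probs in enumerate(probabilities):
        confidence = max(probs)
        label = probs.index(confidence)
        target = accepted if confidence >= threshold else needs_review
        target.append((index, label, confidence))
    return accepted, needs_review


accepted, needs_review = route_predictions([
    [0.90, 0.05, 0.05],   # confident: accepted
    [0.40, 0.35, 0.25],   # uncertain: sent to review
])
```

Softmax probabilities are not always well calibrated, so the threshold is best chosen by checking accuracy on held-out data at several confidence levels.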

In short, softmax regression is a simple yet effective multi-class model that is well worth studying and applying to practical problems.

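A Brief Introduction to Deep Learning Frameworks

With Python and NumPy we can implement deep learning algorithms from scratch and understand how they work. Implementing complex models this way, such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs), is very difficult, though. Fortunately there are many excellent open-source frameworks, such as TensorFlow and PyTorch, that give researchers and engineers the tools to build, train, and deploy neural network models.

When choosing a framework, the following points matter:

- Ease of programming: this helps both with developing and iterating on a network and with deploying it to production.

- Running speed: when training on large datasets, some frameworks let you run and train networks more efficiently.

- Truly open: a truly open framework needs not only open source code but also good governance. Frameworks that are likely to stay open in the long run deserve more trust than ones controlled by a single company.

The choice can also depend on your preferred programming language and the kind of application you are building. The right framework makes developing machine learning applications more efficient.

That is all for this post. I hope it helps, and comments are welcome.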
